Audio/Video Capture and One-Way Transmission (HiSilicon Hi3516EV200 Platform)


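I recently took on a project that required sending surveillance video from a camera to another terminal over a laser link. The link is strictly one-way: the terminal can only passively receive and display the data. This was a tricky requirement. Existing video transmission is always two-way: even though the camera sends data continuously, control frames still flow in the other direction, so the video stops as soon as the link becomes one-way. A new communication protocol and physical design therefore had to be built.

1. Hardware Selection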

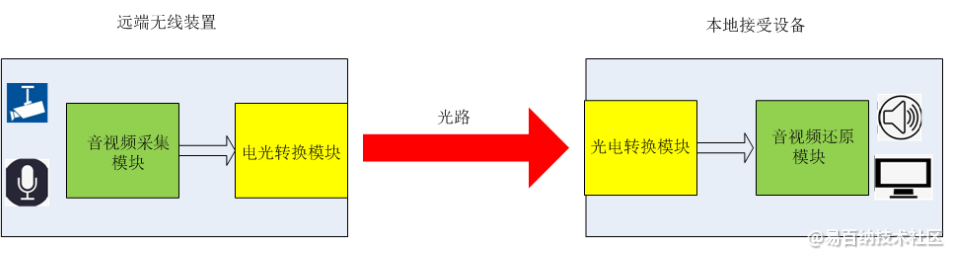

项目的物理结构图如下图所示:

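1.1 Remote Wireless Unit

Requirements: small footprint, thin, low power consumption and battery-powered; this is a miniature device.

1.1.1 Audio/Video Capture Module

- Captures camera video and microphone audio, encodes the video as H.264 and packages it together with the audio. Video is 1080P at 30 FPS; the audio sample rate and bit depth should be as high as possible to improve capture quality;

- The camera uses a digital interface, tentatively MIPI;

- The microphone outputs an analog signal;

- Connects to the optical-electrical conversion module through a USB-to-serial adapter and sends out the H.264/H.265 stream;

- The processing platform for this module is tentatively the Hi3516EV200: it is small, consumes little power, and a ready-made development board is available for debugging. The board has a camera, microphone, USB and networking, so it is sufficient to validate a prototype.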

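1.1.2 Optical-Electrical Conversion Module

- Converts the serial stream from the audio/video capture module into laser light and sends it to the receiver

- Transmission rate up to 100 Mbps

1.2 Local Receiving Equipment

1.2.1 Optical-Electrical Conversion Module

Receives the laser light sent by the remote unit and converts it back into an electrical serial signal, which is passed through a serial-to-USB adapter to the audio/video playback module

1.2.2 Audio/Video Playback Module

- Receives the signal from the optical-electrical conversion module

- Decodes H.264/H.265, shows the picture on an LCD and plays the sound through speakers or headphones

- Since this platform sits on the local receiving side, a larger processor can be used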

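The FT4232H was chosen as the USB-to-serial module. Its RS232/RS422/RS485 serial ports support data rates of up to 12 Mbaud, which fully meets the project's requirements.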
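As a rough sanity check of the 12 Mbaud figure, the arithmetic below assumes the common 8N1 framing (1 start bit, 8 data bits, no parity, 1 stop bit). The article does not state the frame format, and the helper names are illustrative. Each byte then occupies 10 bit times on the wire, so the usable payload rate is baud / 10 bytes per second.

```c
#include <stdint.h>

/* Payload bytes per second on a UART link.
 * Each byte costs 1 start bit + data bits + parity bits + stop bits
 * (8N1 means data_bits = 8, parity_bits = 0, stop_bits = 1). */
static uint32_t uart_payload_bytes_per_sec(uint32_t baud, unsigned data_bits,
                                           unsigned parity_bits, unsigned stop_bits)
{
    unsigned bits_per_byte = 1 + data_bits + parity_bits + stop_bits;
    return baud / bits_per_byte;
}

/* The same payload rate expressed in useful (data) bits per second. */
static uint32_t uart_payload_bits_per_sec(uint32_t baud, unsigned data_bits,
                                          unsigned parity_bits, unsigned stop_bits)
{
    return uart_payload_bytes_per_sec(baud, data_bits, parity_bits, stop_bits) * data_bits;
}
```

At 12000000 baud with 8N1 this gives 1200000 bytes/s, i.e. 9.6 Mbit/s of payload: that is the budget the encoded audio, video and frame headers have to fit into.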

2. Serial Port Configuration

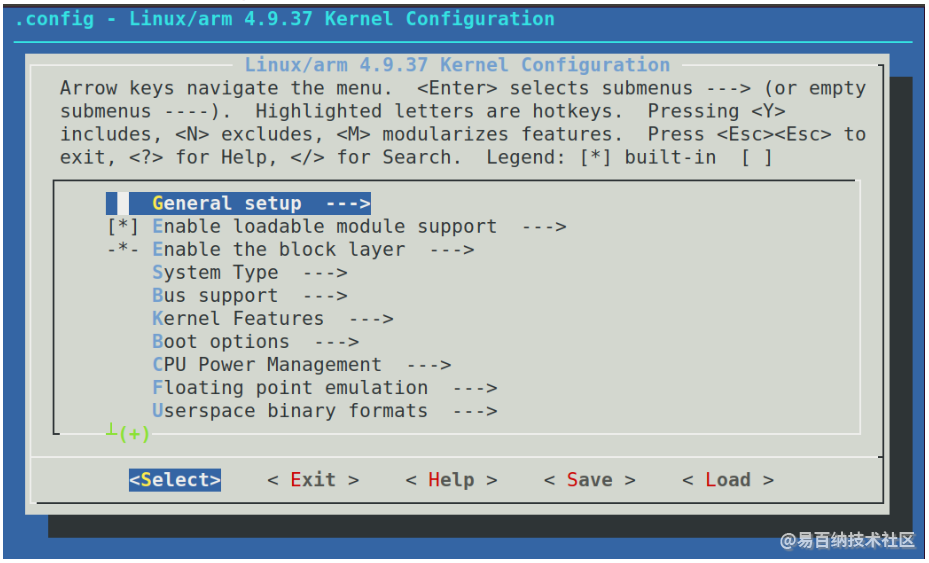

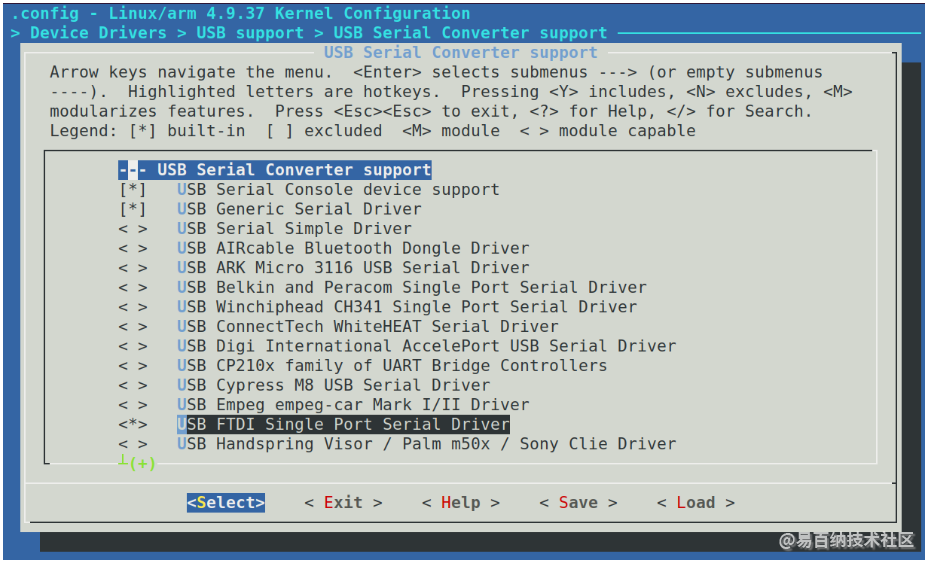

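The SDK provided by HiSilicon does not support the FT4232H serial port by default, so the corresponding driver has to be enabled in the kernel configuration, as follows:

- Enter the kernel directory: `cd linux-4.9.y/`

- Open the kernel configuration: `make menuconfig`

- Go to the driver configuration and enable the FTDI USB serial driver (`CONFIG_USB_SERIAL_FTDI_SIO`, under Device Drivers → USB support → USB Serial Converter support), as shown in the screenshots above

- Save the configuration and rebuild the kernel:

```shell
make clean
make uImage
cp ./arch/arm/boot/uImage ./uImage.3516ev200
```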

With the FTDI driver in place, the application must set the serial baud rate itself. The following code can serve as a reference:

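```c
#include <stdio.h>
#include <string.h>
#include <errno.h>
#include <termios.h>
#include <sys/ioctl.h>
#include <linux/serial.h>

// Set a non-standard (custom) baud rate, e.g. 200000.
// Trick: select B38400 in termios, then set ASYNC_SPD_CUST so that the
// driver replaces 38400 with baud_base / custom_divisor.
int set_speci_baud(int fd, int baud)
{
    struct serial_struct ss, ss_set;
    struct termios Opt;

    if (baud <= 0 || tcgetattr(fd, &Opt) != 0)
    {
        return -1;
    }
    cfsetispeed(&Opt, B38400);
    cfsetospeed(&Opt, B38400);
    tcflush(fd, TCIFLUSH);      /* discard input that has not been read yet */
    tcsetattr(fd, TCSANOW, &Opt);

    if (ioctl(fd, TIOCGSERIAL, &ss) < 0)
    {
        printf("BAUD: error to get the serial_struct info:%s\n", strerror(errno));
        return -1;
    }

    /* Keep the other flags; only switch the speed field to "custom" */
    ss.flags = (ss.flags & ~ASYNC_SPD_MASK) | ASYNC_SPD_CUST;
    /* Integer division: only rates that divide baud_base are exact */
    ss.custom_divisor = ss.baud_base / baud;
    if (ss.custom_divisor == 0)
    {
        printf("BAUD: %d is above baud_base %d\n", baud, ss.baud_base);
        return -2;
    }
    if (ioctl(fd, TIOCSSERIAL, &ss) < 0)
    {
        printf("BAUD: error to set serial_struct:%s\n", strerror(errno));
        return -2;
    }

    ioctl(fd, TIOCGSERIAL, &ss_set);
    printf("BAUD: success set baud to %d,custom_divisor=%d,baud_base=%d\n",
           baud, ss_set.custom_divisor, ss_set.baud_base);
    return 0;
}
```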

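The configurable rates include 12000000, 8000000, 6000000, 4000000, 3000000, 2000000, 1000000, and so on. The rate must be a divisor of 24000000, i.e. 24000000 must be evenly divisible by it: the driver derives the actual rate as baud_base / custom_divisor, where baud_base is 24000000 here and custom_divisor is an integer.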
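Because `custom_divisor = baud_base / baud` is an integer division, a requested rate that does not divide 24000000 is silently rounded to a different rate. The sketch below reproduces that arithmetic so a rate can be checked before it is passed to `set_speci_baud`. The 24000000 base comes from the text above; the function names are illustrative.

```c
#include <stdbool.h>
#include <stdint.h>

#define FTDI_BAUD_BASE 24000000u  /* baud_base value given in the text above */

/* Rate the driver actually uses: baud_base / (baud_base / requested),
 * mirroring the integer divisor computed in set_speci_baud().
 * Returns 0 if the request is 0 or above baud_base (divisor would be 0). */
static uint32_t effective_baud(uint32_t requested)
{
    if (requested == 0 || requested > FTDI_BAUD_BASE)
        return 0;
    uint32_t divisor = FTDI_BAUD_BASE / requested;
    return FTDI_BAUD_BASE / divisor;
}

/* True when the requested rate is reproduced exactly. */
static bool is_exact_baud(uint32_t requested)
{
    return requested != 0 && FTDI_BAUD_BASE % requested == 0;
}
```

For example, asking for 7000000 gives a divisor of 3, so the port actually runs at 8000000 baud, and a receiver configured for 7000000 would not decode it.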

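3. Audio/Video Capture

Video capture was implemented by modifying HiSilicon's rtsp sample code. The basic program flow is:

- Initialize the software: set the audio and video formats and initialize the data queues;

- Open the USB serial port and set the baud rate;

- Create the video and audio capture threads;

- Loop over the audio/video queue and send any available data out through the serial port.

Sample code: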

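```c
// Retry until the USB serial port can be opened
while (init_serial() < 0)
{
    sleep(1);
}
set_speci_baud(serial_fd, nRate);

signal(SIGINT, SAMPLE_VENC_HandleSig);
signal(SIGTERM, SAMPLE_VENC_HandleSig);

SAMPLE_AUDIO_RegisterVQEModule();
// Video capture and encoding thread
pthread_create(&id, NULL, SAMPLE_VENC_1080P_CLASSIC, NULL);
// On-screen display: time, name and logo
osdinit(pszName);
sleep(1);
// Audio capture and encoding thread
pthread_create(&audio, NULL, SAMPLE_AUDIO_CLASSIC, NULL);

while (1)
{
    pn = GetStreamInfoFromQueue();
    if (pn != NULL)
    {
        pInfo = (pStreamInfo)pn->pdata;
        // Frame header, multi-byte fields in network byte order.
        // len goes through htons(), so one frame must be smaller than 64 KB.
        head.id = htons(nID);
        head.type = pInfo->type;
        head.len = htons(pInfo->len);
        head.pts = htonll(pInfo->u64TimeStamp);
        head.crc16 = htons(GetCCITTCrc((unsigned char*)pInfo->buf, pInfo->len));
        // Header first, then the payload
        uart_send(serial_fd, &head, sizeof(CStreamHead));
        uart_send(serial_fd, pInfo->buf, pInfo->len);
        RealseStreamInfo2Pool(pn);
        // The receiver counts lost frames from gaps in this sequence number,
        // so it must advance with every frame (only the low 16 bits are sent)
        nID++;
        lSendCount++;
    }
    usleep(1);
}
```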
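The definitions of `CStreamHead`, `GetCCITTCrc` and `htonll` are not shown. The sketch below illustrates one plausible header layout, inferred only from the fields used above and the `$^$` marker the receiver looks for in section 4: a 3-byte marker, then id, type, len, pts and crc16, each multi-byte field in network byte order. The field widths and order, the names, and the CRC variant (CRC-16/CCITT-FALSE: polynomial 0x1021, initial value 0xFFFF) are all assumptions; whatever is chosen must match on both ends.

```c
#include <stddef.h>
#include <stdint.h>

#define FRAME_HEAD_SIZE 18  /* 3 marker + 2 id + 1 type + 2 len + 8 pts + 2 crc */

/* CRC-16/CCITT-FALSE (poly 0x1021, init 0xFFFF, no reflection, no final XOR).
 * Which variant GetCCITTCrc() implements is not stated; this is an assumption. */
static uint16_t crc16_ccitt(const uint8_t *data, size_t len)
{
    uint16_t crc = 0xFFFF;
    for (size_t i = 0; i < len; i++) {
        crc ^= (uint16_t)(data[i] << 8);
        for (int bit = 0; bit < 8; bit++)
            crc = (crc & 0x8000) ? (uint16_t)((crc << 1) ^ 0x1021) : (uint16_t)(crc << 1);
    }
    return crc;
}

/* Store v big-endian (network order) in n bytes. */
static void put_be(uint8_t *out, uint64_t v, int n)
{
    for (int i = n - 1; i >= 0; i--) {
        out[i] = (uint8_t)(v & 0xFF);
        v >>= 8;
    }
}

/* Serialize the hypothetical header field by field; returns the bytes written. */
static size_t build_frame_head(uint8_t out[FRAME_HEAD_SIZE], uint16_t id, uint8_t type,
                               const uint8_t *payload, uint16_t len, uint64_t pts)
{
    out[0] = '$'; out[1] = '^'; out[2] = '$';          /* frame marker */
    put_be(out + 3, id, 2);                           /* sequence number */
    out[5] = type;                                    /* audio/video type */
    put_be(out + 6, len, 2);                          /* payload length */
    put_be(out + 8, pts, 8);                          /* timestamp */
    put_be(out + 16, crc16_ccitt(payload, len), 2);   /* payload checksum */
    return FRAME_HEAD_SIZE;
}
```

Writing the fields byte by byte avoids depending on struct padding and host byte order, which matters when the two ends are built with different compilers (an ARM cross-compiler here, MSVC on the receiver).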

In the video capture thread (the SAMPLE_COMM_VENC_GetVencStreamProc function), each received video frame is added to the queue. The code is simple and is not listed here.

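In the audio capture thread (the SAMPLE_COMM_AUDIO_AencProc function), each received audio frame is also added to the queue, but its first 4 bytes must be removed before insertion, because they are HiSilicon's audio frame header.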
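Stripping the HiSilicon header amounts to skipping the first 4 bytes of each audio frame before it is queued. A minimal sketch: only the 4-byte length comes from the text, and the function and macro names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define HISI_AUDIO_HEAD_LEN 4  /* size of the HiSilicon audio header, per the text */

/* Returns a pointer to the audio payload after the 4-byte header and stores
 * its length in *out_len; returns NULL if the frame is too short. */
static const uint8_t *strip_hisi_audio_head(const uint8_t *frame, size_t len, size_t *out_len)
{
    if (frame == NULL || len < HISI_AUDIO_HEAD_LEN)
        return NULL;
    *out_len = len - HISI_AUDIO_HEAD_LEN;
    return frame + HISI_AUDIO_HEAD_LEN;
}
```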

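That is all there is to it: the sender needs very few code changes and is easy to implement. Put the compiled program in the bin directory of the root filesystem and add a line to rcS that starts it, and the board begins sending data as soon as it powers up.

Note also that the following code must be removed from the sample's `void *SAMPLE_VENC_1080P_CLASSIC(void *p)` function; otherwise the program fails when it is started automatically from rcS, where there is no interactive input for the getchar() calls to wait on:

```c
printf("please press twice ENTER to exit this sample\n");
getchar();
getchar();
```

Replace it with:

```c
while (1)
{
    sleep(1);
}
```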
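An alternative to the endless `sleep(1)` loop, sketched under the assumption that the thread should still end cleanly on SIGINT/SIGTERM: the handler only sets a `volatile sig_atomic_t` flag, which is the async-signal-safe way to communicate with normal code, and the loop polls it. The names are illustrative; since the sample already registers `SAMPLE_VENC_HandleSig` for these signals, this would extend that handler rather than add a second one.

```c
#include <signal.h>
#include <unistd.h>

/* Set from the signal handler; writing a sig_atomic_t there is safe. */
static volatile sig_atomic_t g_stop_requested = 0;

static void on_stop_signal(int signo)
{
    (void)signo;
    g_stop_requested = 1;
}

/* Install the handler for SIGINT and SIGTERM. */
static void install_stop_handlers(void)
{
    signal(SIGINT, on_stop_signal);
    signal(SIGTERM, on_stop_signal);
}

/* Replacement for the getchar() pair: block until a stop signal arrives,
 * without needing a terminal. */
static void wait_for_stop(void)
{
    while (!g_stop_requested)
        sleep(1);
}
```

After `wait_for_stop()` returns, the sample's normal teardown code can run, which the infinite loop never reaches.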

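4. Audio/Video Reception and Display

Because the data flows in one direction only, existing open-source tools cannot be used to display the audio and video, so reception, display and storage have to be implemented from scratch.

I am an old-timer whose strongest language is C++, so the receiver was built with MFC + SDL + FFmpeg.

The design uses five threads: a serial receive thread, a data-parsing thread, a saving thread, a video playback thread and an audio playback thread.

The serial receive thread reads data from the serial port and passes it to the data-parsing thread: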

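```cpp
// Read the serial port in a loop
while (m_nTheradStatus == THREAD_RUNNING)
{
    pBuffer = g_dbRecvBuffer.GetBuffer();
    // Got a free buffer from the pool: read into it
    if (pBuffer != NULL && pBuffer->m_dwHead == RECV_BUF_HEAD)
    {
        pszBuffer = pBuffer->m_pszBuffer;
        // If a read returns nothing, keep reading into the same buffer instead of
        // releasing it, but stop when the thread is asked to exit
        do
        {
            nSize = m_pSerial->read(pszBuffer, RECV_SIZE);
        } while (nSize <= 0 && m_nTheradStatus == THREAD_RUNNING);

        if (nSize > 0)
        {
            m_lDataCount += nSize;
            // Clamp the length to the buffer size
            pBuffer->m_nLen = nSize > RECV_SIZE ? RECV_SIZE : nSize;
            // Hand the chunk to the parsing thread
            g_dbRecvList.AddData(pBuffer);
        }
        else
        {
            // Exiting: return the unused buffer to the pool
            pBuffer->Clear();
            g_dbRecvBuffer.ReleaseBuffer(pBuffer);
        }
    }
    else
    {
        // No valid free buffer: wait for the parser to release some
        TRACE("no valid receive buffer available\n");
        Sleep(1);
    }
}
```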

The data-parsing thread splits the byte stream into audio and video frames and adds them to the corresponding playback queues:

```cpp
while (m_nTheradStatus == THREAD_RUNNING)
{
    pBuffer = g_dbRecvList.GetData();
    if (pBuffer != NULL && pBuffer->m_nLen <= RECV_SIZE && pBuffer->m_dwHead == RECV_BUF_HEAD)
    {
        // Append the new chunk to the reassembly buffer (truncated if it would overflow)
        nCopyCount = pBuffer->m_nLen > STREAM_BUF_DLEN - nLen ? STREAM_BUF_DLEN - nLen : pBuffer->m_nLen;
        memcpy(pszBuffer + nLen, pBuffer->m_pszBuffer, nCopyCount);
        nLen += nCopyCount;
        pBuffer->Clear();
        g_dbRecvBuffer.ReleaseBuffer(pBuffer);

        char* p = pszBuffer;
        int size = nLen;
        // Parse every complete frame currently in the buffer, not just the first one
        while (size >= (int)sizeof(CStreamHead))
        {
            // Look for the frame marker "$^$"; otherwise slide forward one byte
            if (!(p[0] == '$' && p[1] == '^' && p[2] == '$'))
            {
                p++;
                size--;
                continue;
            }
            // Parse the header
            CStreamHead* pHead = (CStreamHead*)p;
            pIndex = p + sizeof(CStreamHead);
            // Payload length
            int nDateLen = ntohs(pHead->len);
            // A frame that can never fit into the buffer is a false marker: skip it
            if (nDateLen + (int)sizeof(CStreamHead) > STREAM_BUF_DLEN)
            {
                p++;
                size--;
                continue;
            }
            // Frame not complete yet: wait for more data
            if (size < nDateLen + (int)sizeof(CStreamHead))
            {
                break;
            }

            // A complete frame: process it
            m_dwStreamCount++;
            unsigned short crc16 = GetCCITTCrc((UCHAR*)pIndex, nDateLen);
            // The first frames are ignored in the loss and error statistics
            if (nTimes < 1000)
            {
                nTimes++;
            }
            // Verify the checksum to detect bit errors
            if (crc16 != ntohs(pHead->crc16))
            {
                if (nTimes > 10)
                {
                    m_dwWrongCount++;   // CRC error
                }
                // Bytes may have been lost mid-frame, so the next header can lie inside
                // this payload: search backwards for the marker within the payload
                int nCount = nDateLen - 3;
                while (nCount > 0)
                {
                    if (pIndex[nCount] == '$' && pIndex[nCount + 1] == '^' && pIndex[nCount + 2] == '$')
                    {
                        break;
                    }
                    nCount--;
                }
                // Found one: end this frame there so the next frame is parsed from it
                if (nCount > 0)
                {
                    nDateLen = nCount;
                }
            }

            // Sequence number
            wCurID = ntohs(pHead->id);
            // Count lost frames from gaps in the sequence number
            if (nTimes > 100)
            {
                if (wLastID == 0xFFFF && wCurID == 0)
                {
                    // Normal wrap-around: nothing lost
                }
                else if (wLastID == 0xFFFF && wCurID != 0)
                {
                    m_dwLossCount += wCurID;
                }
                else if (wCurID < wLastID)
                {
                    m_dwLossCount += ((0xFFFF - wLastID) + wCurID);
                }
                else if (wCurID > wLastID + 1)
                {
                    m_dwLossCount += (wCurID - wLastID - 1);
                }
            }
            // Remember the last sequence number
            wLastID = wCurID;

            // Queue this frame for playback
            pPlayBuffer = g_dbPlayBuffer.GetBuffer();
            if (pPlayBuffer != NULL)
            {
                memcpy(pPlayBuffer->m_pszBuffer, pIndex, nDateLen);
                pPlayBuffer->m_nLen = nDateLen;
                pPlayBuffer->m_nType = pHead->type;
                pPlayBuffer->m_dwTimePoint = GetTickCount();
                pPlayBuffer->m_llPts = ntohll(pHead->pts);
                // Audio or video?
                if (pPlayBuffer->m_nType <= TYPE_ADUIO_CLASS)
                {
                    g_dbAudioList.AddData(pPlayBuffer);
                }
                else
                {
                    g_dbVedioList.AddData(pPlayBuffer);
                }
            }

            // Move on to the next frame in the buffer
            p += nDateLen + sizeof(CStreamHead);
            size -= nDateLen + (int)sizeof(CStreamHead);
        }
        // Keep the unparsed tail (a partial header or frame) for the next chunk
        memmove(pszBuffer, p, size);
        nLen = size;
    }
    else
    {
        // No data available: back off briefly
        Sleep(10);
    }
}
```
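The four-branch wrap-around logic above can also be written with 16-bit modular arithmetic: the number of missing sequence numbers between two consecutively received IDs is `(cur - last - 1) mod 65536`. A sketch for comparison, with an illustrative function name; for every ID that is not a repeat it yields the same count as the branches above, and a repeated ID is simply counted as 0.

```c
#include <stdint.h>

/* Frames missing between two consecutively received 16-bit sequence numbers.
 * Converting the difference to uint16_t reduces it modulo 65536, which handles
 * the 0xFFFF -> 0 rollover without special cases. A repeated ID counts as 0. */
static uint16_t lost_between(uint16_t last, uint16_t cur)
{
    if (cur == last)
        return 0;
    return (uint16_t)(cur - last - 1);
}
```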

The video playback thread decodes with FFmpeg and displays the frames with SDL:

```cpp
int CVedioThread::Run(void)
{
    // ---------------- FFmpeg ----------------
    AVCodecContext* pCtx = NULL;            // decoder context
    AVPacket* packet;                       // encoded data, not yet decoded
    AVFrame* pFrame;                        // decoded video frame
    AVFrame* pFrameYUV;                     // frame scaled to the window size for display
    struct SwsContext* mSwsContext = NULL;  // scaling/conversion context for display
    AVCodecID nCodecID = AV_CODEC_ID_HEVC;  // H.265 by default
    const AVCodec* pCodec = NULL;           // decoder
    uint8_t* pbVedioOutBuf = NULL;          // pixel buffer for display
    int nVOBSize;                           // size of the display buffer
    int nWidth = 1920;
    int nHeight = 1080;
    int nShowWidth = 1920;
    int nShowHeight = 1080;
    int nSleep = 0;                         // playback delay (declared here so the gotos do not skip it)
    CPlayBuffer* pPlayBuffer = NULL;
    m_bExit = FALSE;

    // Initialization (av_packet_alloc already initializes the packet)
    pFrame = av_frame_alloc();
    packet = av_packet_alloc();
    pFrameYUV = av_frame_alloc();
    mSDL_Window = SDL_CreateWindowFrom(m_hWnd);
    mSDL_Renderer = SDL_CreateRenderer(mSDL_Window, -1, 0);
    SDL_GetWindowSize(mSDL_Window, &nShowWidth, &nShowHeight);
    TRACE("mSDL_Window W:%d,H:%d\n", nShowWidth, nShowHeight);

    // Wait until the first frame can be fetched
    pPlayBuffer = g_dbVedioList.GetData();
    while (pPlayBuffer == NULL && m_nTheradStatus == THREAD_RUNNING)
    {
        Sleep(1);
        pPlayBuffer = g_dbVedioList.GetData();
    }
    if (m_nTheradStatus != THREAD_RUNNING)
    {
        goto FREE;
    }

    // The first frame's type determines the decoder and the picture size
    switch (pPlayBuffer->m_nType)
    {
    case TYPE_H265_240P:  nWidth = 360;  nHeight = 240;  nCodecID = AV_CODEC_ID_HEVC; break;
    case TYPE_H265_360P:  nWidth = 640;  nHeight = 360;  nCodecID = AV_CODEC_ID_HEVC; break;
    case TYPE_H265_480P:  nWidth = 720;  nHeight = 480;  nCodecID = AV_CODEC_ID_HEVC; break;
    case TYPE_H265_720P:  nWidth = 1280; nHeight = 720;  nCodecID = AV_CODEC_ID_HEVC; break;
    case TYPE_H265_1080P: nWidth = 1920; nHeight = 1080; nCodecID = AV_CODEC_ID_HEVC; break;
    case TYPE_H265_1944P: nWidth = 2688; nHeight = 1944; nCodecID = AV_CODEC_ID_HEVC; break;
    case TYPE_H264_240P:  nWidth = 360;  nHeight = 240;  nCodecID = AV_CODEC_ID_H264; break;
    case TYPE_H264_360P:  nWidth = 640;  nHeight = 360;  nCodecID = AV_CODEC_ID_H264; break;
    case TYPE_H264_720P:  nWidth = 1280; nHeight = 720;  nCodecID = AV_CODEC_ID_H264; break;
    case TYPE_H264_1080P: nWidth = 1920; nHeight = 1080; nCodecID = AV_CODEC_ID_H264; break;
    case TYPE_H264_1944P: nWidth = 2688; nHeight = 1944; nCodecID = AV_CODEC_ID_H264; break;
    default:              nWidth = 1920; nHeight = 1080; nCodecID = AV_CODEC_ID_HEVC; break;
    }

    // Decoder context: look up the decoder first and allocate the context for it
    pCodec = avcodec_find_decoder(nCodecID);
    if (pCodec == NULL || (pCtx = avcodec_alloc_context3(pCodec)) == NULL)
    {
        AfxMessageBox(IDS_OPEN_DECODE_FAILED);
        goto FREE;
    }
    pCtx->width = nWidth;
    pCtx->height = nHeight;
    pCtx->codec_type = AVMEDIA_TYPE_VIDEO;
    pCtx->codec_id = nCodecID;
    pCtx->bit_rate_tolerance = 4000000;
    pCtx->pix_fmt = AV_PIX_FMT_YUVJ420P;
    pCtx->color_primaries = AVCOL_PRI_BT709;
    pCtx->color_trc = AVCOL_TRC_BT709;
    pCtx->colorspace = AVCOL_SPC_BT709;
    pCtx->color_range = AVCOL_RANGE_JPEG;
    if (avcodec_open2(pCtx, pCodec, NULL) != 0)
    {
        AfxMessageBox(IDS_OPEN_DECODE_FAILED);
        goto FREE;
    }

    // SDL: scale to the window size in planar YUV420P, matching an IYUV texture
    mSwsContext = sws_getContext(pCtx->width, pCtx->height, pCtx->pix_fmt,
                                 nShowWidth, nShowHeight, AV_PIX_FMT_YUV420P,
                                 SWS_BICUBIC, NULL, NULL, NULL);
    nVOBSize = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, nShowWidth, nShowHeight, 1);
    pbVedioOutBuf = (uint8_t*)av_malloc(nVOBSize);
    av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, pbVedioOutBuf,
                         AV_PIX_FMT_YUV420P, nShowWidth, nShowHeight, 1);
    mSDL_Texture = SDL_CreateTexture(mSDL_Renderer, SDL_PIXELFORMAT_IYUV,
                                     SDL_TEXTUREACCESS_STREAMING, nShowWidth, nShowHeight);

    m_dwStartTime = GetTickCount();
    while (m_nTheradStatus == THREAD_RUNNING)
    {
        if (pPlayBuffer != NULL)
        {
            // Point the packet at our own buffer; FFmpeg does not own it, so nothing to free
            // (av_packet_from_data would leak memory here)
            packet->data = (UCHAR*)pPlayBuffer->m_pszBuffer;
            packet->size = pPlayBuffer->m_nLen;
            packet->stream_index = 0;
            if (pPlayBuffer->m_dwSleepTime > 0 && m_nTheradStatus == THREAD_RUNNING)
            {
                nSleep = pPlayBuffer->m_dwTimePoint - (GetTickCount() - m_dwStartTime);
                TRACE("Play vedio sleep = %d\n", nSleep);
                if (nSleep > 0)
                {
                    Sleep(nSleep);
                }
            }

            int nRet = 0;
            try
            {
                // Decode
                nRet = avcodec_send_packet(pCtx, packet);
                if (nRet != 0)
                {
                    goto RELEASE_BUF;
                }
                if (avcodec_receive_frame(pCtx, pFrame) != 0)
                {
                    goto RELEASE_BUF;
                }
            }
            catch (...)
            {
                goto RELEASE_BUF;
            }

            // Hand the encoded frame to the saving thread, or return it to the pool
            if (m_bSave)
            {
                g_dbSaveList.AddData(pPlayBuffer);
            }
            else
            {
                pPlayBuffer->Clear();
                g_dbPlayBuffer.ReleaseBuffer(pPlayBuffer);
            }

            // Display: scale into pFrameYUV, upload the three planes, render
            sws_scale(mSwsContext, pFrame->data, pFrame->linesize, 0, pCtx->height,
                      pFrameYUV->data, pFrameYUV->linesize);
            nRet = SDL_UpdateYUVTexture(mSDL_Texture, NULL,
                                        pFrameYUV->data[0], pFrameYUV->linesize[0],
                                        pFrameYUV->data[1], pFrameYUV->linesize[1],
                                        pFrameYUV->data[2], pFrameYUV->linesize[2]);
            if (nRet < 0)
            {
                AfxMessageBox(_T("error!"));
            }
            nRet = SDL_RenderClear(mSDL_Renderer);
            if (nRet < 0)
            {
                AfxMessageBox(_T("error!"));
            }
            nRet = SDL_RenderCopy(mSDL_Renderer, mSDL_Texture, NULL, NULL);
            if (nRet < 0)
            {
                AfxMessageBox(_T("error!"));
            }
            SDL_RenderPresent(mSDL_Renderer);
            av_frame_unref(pFrame);

            // Fetch the next frame from the queue
            pPlayBuffer = g_dbVedioList.GetData();
            continue;
RELEASE_BUF:
            pPlayBuffer->Clear();
            g_dbPlayBuffer.ReleaseBuffer(pPlayBuffer);
        }
        else
        {
            Sleep(10);
        }
        // Fetch the next frame from the queue
        pPlayBuffer = g_dbVedioList.GetData();
    }

FREE:
    // Return a frame that was fetched but never played
    if (pPlayBuffer != NULL)
    {
        pPlayBuffer->Clear();
        g_dbPlayBuffer.ReleaseBuffer(pPlayBuffer);
    }
    // Release FFmpeg resources (each of these calls accepts NULL)
    av_free(pbVedioOutBuf);
    sws_freeContext(mSwsContext);
    avcodec_free_context(&pCtx);
    av_packet_free(&packet);
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    // Drop frames that were never displayed
    g_dbVedioList.ClearDataList();
    SDL_DestroyTexture(mSDL_Texture);
    if (!m_bExit)
    {
        SDL_DestroyWindow(mSDL_Window);
        ::ShowWindow(m_hWnd, SW_SHOW);
    }
    // End of thread
    Close();
    return 0;
}
```
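The long `switch` that maps the first frame's type to a decoder and picture size can also be expressed as a lookup table, which keeps the resolution list in one place. The sketch below uses a hypothetical enum and plain constants in place of the real `TYPE_*` values and `AVCodecID`, which are not shown; like the `switch`, it falls back to 1080P H.265 for unknown types.

```c
#include <stddef.h>

/* Hypothetical stand-ins for the TYPE_* constants and the two codec IDs. */
enum stream_type { T_H265_240P = 1, T_H265_720P, T_H265_1080P, T_H264_720P, T_H264_1080P };
enum video_codec { CODEC_HEVC, CODEC_H264 };

struct stream_format {
    int type;
    int width;
    int height;
    enum video_codec codec;
};

static const struct stream_format k_formats[] = {
    { T_H265_240P,  360,  240,  CODEC_HEVC },
    { T_H265_720P,  1280, 720,  CODEC_HEVC },
    { T_H265_1080P, 1920, 1080, CODEC_HEVC },
    { T_H264_720P,  1280, 720,  CODEC_H264 },
    { T_H264_1080P, 1920, 1080, CODEC_H264 },
};

/* Same fallback as the switch's default branch: 1920x1080 H.265. */
static const struct stream_format k_default_format = { 0, 1920, 1080, CODEC_HEVC };

/* Linear search is fine for a dozen entries. */
static const struct stream_format *lookup_format(int type)
{
    for (size_t i = 0; i < sizeof(k_formats) / sizeof(k_formats[0]); i++)
        if (k_formats[i].type == type)
            return &k_formats[i];
    return &k_default_format;
}
```

Adding a resolution then means adding one row, and a gap is easier to spot: the `switch` above, for instance, has an H.265 480P case but no H.264 480P case, so such a stream would be handed to the H.265 decoder.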

The audio playback thread decodes with FFmpeg and plays the sound through SDL:

```cpp
// Thread main function
int CAudioThread::Run(void)
{
    // ---------------- FFmpeg ----------------
    const AVCodec* pCodecAudioDec;
    AVCodecContext* pCodecCtxAudio;
    AVPacket* pkAudio;
    AVFrame* pAudioFrame;
    struct SwrContext* mSwsAudioContext;
    SDL_AudioSpec wantSpec;
    SDL_AudioDeviceID deviceID;          // SDL audio device ID
    unsigned char* pbAudioBuffer = NULL;
    int nAudioBufLen = 0;

    CoInitialize(NULL);

    // G.711 A-law decoder
    pCodecAudioDec = avcodec_find_decoder(AV_CODEC_ID_PCM_ALAW);
    if (!pCodecAudioDec)
    {
        TRACE(_T("Codec not found audio codec id"));
        AfxMessageBox(IDS_OPEN_DECODE_FAILED);
        CoUninitialize();
        return 3;
    }
    pCodecCtxAudio = avcodec_alloc_context3(pCodecAudioDec);
    if (!pCodecCtxAudio)
    {
        AfxMessageBox(IDS_NEW_MEMERY_FAILED);
        TRACE(_T("Could not allocate audio codec context"));
        CoUninitialize();
        return 4;
    }
    // Input parameters: these must match the HiSilicon encoder settings
    pCodecCtxAudio->sample_fmt = AV_SAMPLE_FMT_S16;  // 16-bit samples
    pCodecCtxAudio->sample_rate = 8000;              // sample rate in Hz
    pCodecCtxAudio->channels = 1;                    // mono
    pCodecCtxAudio->frame_size = 320;                // samples per frame
    // Open the decoder
    if (avcodec_open2(pCodecCtxAudio, pCodecAudioDec, NULL) < 0)
    {
        TRACE(_T("Could not open codec"));
        AfxMessageBox(IDS_OPEN_DECODE_FAILED);
        avcodec_free_context(&pCodecCtxAudio);
        CoUninitialize();
        return 5;
    }

    // Output parameters
    SDL_zero(wantSpec);
    wantSpec.freq = 8000;                // must match the input rate, otherwise the sound stutters
    wantSpec.format = AUDIO_S16SYS;      // signed 16-bit in the system's native byte order
    wantSpec.channels = av_get_channel_layout_nb_channels(AV_CH_LAYOUT_STEREO);  // stereo
    wantSpec.silence = 0;                // computed by SDL (the sample value of silence), not a mute switch
    wantSpec.samples = pCodecCtxAudio->frame_size;  // buffer size in samples, same as the input frame
#ifdef _PUSH_BUFFER_
    wantSpec.callback = NULL;            // push mode (SDL_QueueAudio): no callback
    wantSpec.userdata = NULL;
#else
    wantSpec.callback = fill_audio;      // pull mode: SDL calls fill_audio for data
    wantSpec.userdata = pCodecCtxAudio;
#endif
    // Resampler output buffer: MAX_AUDIO_FRAME_SIZE * 2 bytes
    unsigned char* pbAudioOutBuf = (unsigned char*)av_malloc(MAX_AUDIO_FRAME_SIZE * 2);

    // SDL_OpenAudio always opens the default device, whose ID is 1
    deviceID = 1;
    // Open the speaker
    if (SDL_OpenAudio(&wantSpec, NULL) < 0)
    {
        TRACE(_T("can not open SDL!"));
        AfxMessageBox(IDS_AUDIO_SDL_INIT_FAILED);
        // Keep draining the queue so that play buffers go back to the pool
        while (m_nTheradStatus == THREAD_RUNNING)
        {
            CPlayBuffer* pPlayBuffer = g_dbAudioList.GetData();
            if (pPlayBuffer != NULL)
            {
                pPlayBuffer->Clear();
                g_dbPlayBuffer.ReleaseBuffer(pPlayBuffer);
            }
            else
            {
                Sleep(1);
            }
        }
        av_free(pbAudioOutBuf);
        avcodec_free_context(&pCodecCtxAudio);
        Close();
        CoUninitialize();
        return 6;
    }

#if 0
    // Alternative: SDL_OpenAudioDevice(NULL, ...) also opens the default device, but
    // returns an ID >= 2 (ID 1 is reserved for SDL_OpenAudio) and 0 on failure
    if ((deviceID = SDL_OpenAudioDevice(NULL, 0, &wantSpec, NULL, SDL_AUDIO_ALLOW_ANY_CHANGE)) == 0)
    {
        CString strError;
        strError.Format(_T("SDL_OpenAudioDevice with error deviceID :%u"), deviceID);
        TRACE(strError);
        return 6;
    }
#endif

    // Resampler: decoder output (mono) -> stereo S16 at wantSpec.freq
    mSwsAudioContext = swr_alloc_set_opts(NULL,
        AV_CH_LAYOUT_STEREO,                                     /* out */
        AV_SAMPLE_FMT_S16,                                       /* out */
        wantSpec.freq,                                           /* out */
        av_get_default_channel_layout(pCodecCtxAudio->channels), /* in */
        pCodecCtxAudio->sample_fmt,                              /* in */
        pCodecCtxAudio->sample_rate,                             /* in */
        0,
        NULL);
    // Packet and frame (av_packet_alloc already initializes the packet)
    pkAudio = av_packet_alloc();
    pAudioFrame = av_frame_alloc();
    swr_init(mSwsAudioContext);
    // Start playback (unpause the device)
    SDL_PauseAudioDevice(deviceID, 0);

    int nGotFrame = 0;
    CPlayBuffer* pPlayBuffer = NULL;
    int nSleep = 0;                      // playback delay
    m_dwStartTime = GetTickCount();
    while (m_nTheradStatus == THREAD_RUNNING)
    {
        // Fetch a frame from the queue
        pPlayBuffer = g_dbAudioList.GetData();
        if (pPlayBuffer != NULL)
        {
            // Point the packet at our own buffer; FFmpeg does not own it, so nothing to free
            pkAudio->data = (UCHAR*)pPlayBuffer->m_pszBuffer;
            pkAudio->size = pPlayBuffer->m_nLen;
            pkAudio->stream_index = 0;
            nGotFrame = 0;
            if (pPlayBuffer->m_dwSleepTime > 0)
            {
                nSleep = pPlayBuffer->m_dwTimePoint - (GetTickCount() - m_dwStartTime);
                TRACE("Play audio sleep = %d\n", nSleep);
                if (nSleep > 0)
                {
                    Sleep(nSleep);
                }
            }

            if (pPlayBuffer->m_nType > TYPE_ADUIO_LPCM_R)
            {
                // G.711: decode with the send/receive API (avcodec_decode_audio4 is deprecated)
                if (avcodec_send_packet(pCodecCtxAudio, pkAudio) != 0)
                {
                    goto RELEASE_BUF;
                }
                if (avcodec_receive_frame(pCodecCtxAudio, pAudioFrame) == 0)
                {
                    // Convert to stereo. out_count is in samples per channel: the buffer holds
                    // MAX_AUDIO_FRAME_SIZE * 2 bytes, i.e. MAX_AUDIO_FRAME_SIZE / 2 stereo S16 samples
                    int nRet = swr_convert(mSwsAudioContext, &pbAudioOutBuf, MAX_AUDIO_FRAME_SIZE / 2,
                                           (const uint8_t**)pAudioFrame->data, pAudioFrame->nb_samples);
                    if (nRet > 0)
                    {
                        nGotFrame = 1;
                        pbAudioBuffer = pbAudioOutBuf;
                        // Bytes = samples * 2 channels * 2 bytes per sample
                        nAudioBufLen = nRet * 4;
                    }
                }
            }
            else if (pPlayBuffer->m_nType == TYPE_ADUIO_LPCM_L)
            {
                // LPCM: play the received bytes directly
                nGotFrame = 1;
                pbAudioBuffer = (UCHAR*)pPlayBuffer->m_pszBuffer;
                nAudioBufLen = pPlayBuffer->m_nLen;
            }

            if (nGotFrame > 0)
            {
#ifdef _PUSH_BUFFER_
                // SDL_QueueAudio copies the data, so the buffer can be reused right away
                SDL_QueueAudio(deviceID, pbAudioBuffer, nAudioBufLen);
#else
                // Hand the chunk to fill_audio and wait until it has been consumed: the buffer
                // is overwritten by the next swr_convert (G.711) or released below (LPCM)
                audioChunk = (unsigned char*)pbAudioBuffer;
                audioPos = audioChunk;
                audioLen = nAudioBufLen;
                while (audioLen > 0)
                    SDL_Delay(1);
#endif
            }
            // Played frames go to the saving thread when saving; everything else back to the pool
            if (nGotFrame > 0 && m_bSave)
            {
                g_dbSaveList.AddData(pPlayBuffer);
            }
            else
            {
                pPlayBuffer->Clear();
                g_dbPlayBuffer.ReleaseBuffer(pPlayBuffer);
            }
            // The decoded frame is allocated by FFmpeg and must be unreferenced
            av_frame_unref(pAudioFrame);
            continue;
RELEASE_BUF:
            pPlayBuffer->Clear();
            g_dbPlayBuffer.ReleaseBuffer(pPlayBuffer);
        }
        else
        {
            Sleep(1);
        }
    }

    // Free what was allocated
    av_free(pbAudioOutBuf);
    av_packet_free(&pkAudio);
    av_frame_free(&pAudioFrame);
    swr_free(&mSwsAudioContext);
    avcodec_free_context(&pCodecCtxAudio);
    // Close the speaker
    SDL_CloseAudio();
    // Drop audio that was never played
    g_dbAudioList.ClearDataList();
    // End of thread
    Close();
    CoUninitialize();
    return 0;
}
```
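The audio path decodes G.711 A-law (`AV_CODEC_ID_PCM_ALAW`) and then lets `swr_convert` turn mono into stereo, which is why the byte count is `nRet * 4` (samples × 2 channels × 2 bytes). For readers without FFmpeg at hand, the sketch below performs the same two steps by hand. The A-law expansion follows the classic ITU-T G.711 reference algorithm; the mono-to-stereo step simply duplicates each sample, whereas swr's default remix may apply a different gain, so this is an approximation rather than a byte-exact replacement. Function names are illustrative.

```c
#include <stddef.h>
#include <stdint.h>

/* Expand one G.711 A-law byte to a 16-bit linear sample
 * (classic ITU-T G.711 reference algorithm). */
static int16_t alaw_to_linear(uint8_t a_val)
{
    int t, seg;
    a_val ^= 0x55;                  /* undo the even-bit inversion */
    t = (a_val & 0x0F) << 4;        /* mantissa */
    seg = (a_val & 0x70) >> 4;      /* segment (exponent) */
    switch (seg) {
    case 0:  t += 8; break;
    case 1:  t += 0x108; break;
    default: t += 0x108; t <<= seg - 1; break;
    }
    return (int16_t)((a_val & 0x80) ? t : -t);  /* sign bit set means positive */
}

/* Decode n A-law bytes into interleaved stereo S16 (left = right).
 * out must hold 2 * n samples; returns the output size in bytes (n * 4). */
static size_t alaw_mono_to_stereo_s16(const uint8_t *in, size_t n, int16_t *out)
{
    for (size_t i = 0; i < n; i++) {
        int16_t s = alaw_to_linear(in[i]);
        out[2 * i] = s;       /* left */
        out[2 * i + 1] = s;   /* right */
    }
    return n * 2 * sizeof(int16_t);
}
```

With the 320-sample frames configured above, each frame becomes 1280 bytes of stereo output.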

The saving thread writes the data to the corresponding files. It is very simple, so its code is not listed here.
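Since the saving thread is not shown, here is a minimal sketch of the idea with hypothetical names: video payloads are appended to one file and audio payloads to another. Because the receiver feeds the video payloads to the decoder without any extradata, they are evidently Annex B elementary streams, so the resulting video file can typically be opened directly by tools such as ffplay.

```c
#include <stddef.h>
#include <stdio.h>

/* Hypothetical saver: raw video elementary stream and raw audio in separate files. */
struct av_saver {
    FILE *video;
    FILE *audio;
};

/* Append one received payload to the matching file; returns 0 on success. */
static int save_payload(struct av_saver *s, int is_audio, const void *data, size_t len)
{
    FILE *fp = is_audio ? s->audio : s->video;
    if (fp == NULL)
        return -1;
    return fwrite(data, 1, len, fp) == len ? 0 : -1;
}
```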

5.更换摄像头注意事项

海思平台默认提供的是使用IMX307,而项目需求GC2053摄像头,在网上找了很久没有详细介绍怎么修改的,只是提到在rcS中修改启动参数。下面介绍一下需要修改的地方:

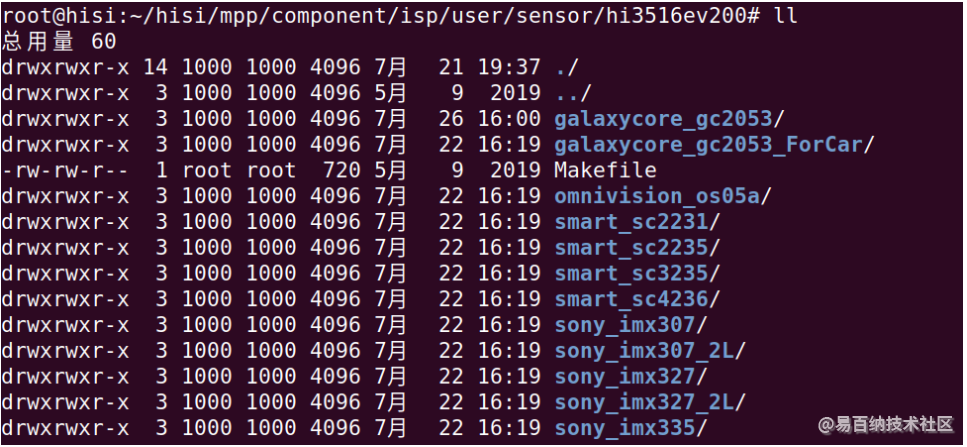

海思3516EV200支持的摄像头在mpp/component/isp/user/sensor/hi3516ev200目录下:

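If the camera's actual initialization sequence does not match the one HiSilicon provides, modify the `void _linear_1080p30_init(VI_PIPE ViPipe)` function in the `_sensor_ctl.c` file in that camera's directory (both names are prefixed with the sensor name).

After the change, run make in the isp directory to build the corresponding libsns_*.a library and .so file, which are placed in mpp/lib/. The rtsp application needs this library at build time, so its Makefile must also be changed to use `-DSENSOR0_TYPE=GALAXYCORE_GC2053_MIPI_2M_30FPS_10BIT`.

Then copy the library files into the application's lib directory.

Finally, change the startup parameters in rcS: `./load3516ev200 -i -sensor gc2053`

6. Creating the Flash Production Image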

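The flash production image is the file handed to the manufacturer for use in production. Its structure is simple: U-Boot is written from offset 0, the configuration data at 0x80000, the kernel from 0xC0000, and the root filesystem at 0x3C0000; any gaps are padded with 0xFF, the erased state of flash.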
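The layout can be written down as a partition table, which also makes the size limits explicit: U-Boot gets 0x80000 bytes (512 KiB), the configuration 0x40000 (256 KiB), the kernel 0x300000 (3 MiB), and the root filesystem everything from 0x3C0000 up to the end of the flash. The sketch below, with illustrative names, checks whether an image fits its partition; the flash size is a parameter because the article does not state it.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

struct flash_part {
    const char *name;
    uint32_t offset;
    uint32_t size;   /* 0 = up to the end of the flash */
};

/* Offsets from the text; sizes are the gaps between consecutive offsets. */
static const struct flash_part k_layout[] = {
    { "u-boot", 0x000000, 0x080000 },
    { "config", 0x080000, 0x040000 },
    { "kernel", 0x0C0000, 0x300000 },
    { "rootfs", 0x3C0000, 0 },
};

/* True if an image of len bytes fits partition idx on a flash of flash_size bytes. */
static bool image_fits(size_t idx, uint32_t len, uint32_t flash_size)
{
    if (idx >= sizeof(k_layout) / sizeof(k_layout[0]))
        return false;
    const struct flash_part *p = &k_layout[idx];
    if (p->size != 0)
        return len <= p->size;
    return flash_size > p->offset && len <= flash_size - p->offset;
}
```

On a 16 MiB part, for example, the root filesystem may be at most 0xC40000 bytes.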

Here is an example for reference:

```c
#include <stdio.h>
#include <string.h>

#define BUF_SIZE       4096      /* copy buffer size (value assumed; not given in the original) */
#define CONFIG_OFFSET  0x80000   /* configuration area */
#define KERNEL_OFFSET  0xC0000   /* kernel */
#define FS_OFFSET      0x3C0000  /* root filesystem */

/* Assumed helper (not shown in the original): print the command-line usage */
static void usage(const char *prog)
{
    printf("Usage: %s <boot file> <kernel file> <fs file> <dest file>\n", prog);
}

/* Assumed helper (not shown in the original): append len bytes of 0xFF,
 * the erased state of flash */
static int fillFF(FILE *fp, unsigned long len)
{
    char ff[BUF_SIZE];
    memset(ff, 0xFF, sizeof(ff));
    while (len > 0)
    {
        size_t n = len > BUF_SIZE ? BUF_SIZE : (size_t)len;
        if (fwrite(ff, 1, n, fp) != n)
        {
            printf("Can't write padding to file, exit!\n");
            return -1;
        }
        len -= n;
    }
    return 0;
}

/* Copy len bytes from src to dst; fails on a short read or write */
static int copyData(FILE *src, FILE *dst, unsigned long len, const char *name)
{
    char pszBuffer[BUF_SIZE];
    while (len > 0)
    {
        size_t nReadLen = len > BUF_SIZE ? BUF_SIZE : (size_t)len;
        nReadLen = fread(pszBuffer, 1, nReadLen, src);
        if (nReadLen == 0)
        {
            printf("Can't read %s data (file too short?), exit!\n", name);
            return -1;
        }
        if (fwrite(pszBuffer, 1, nReadLen, dst) != nReadLen)
        {
            printf("Can't write all %s data to file, exit!\n", name);
            return -1;
        }
        len -= nReadLen;
    }
    return 0;
}

/* Size of an open file; the file is rewound afterwards */
static unsigned long fileSize(FILE *fp)
{
    long len;
    fseek(fp, 0, SEEK_END);
    len = ftell(fp);
    fseek(fp, 0, SEEK_SET);
    return len < 0 ? 0 : (unsigned long)len;
}

int main(int argc, char **argv)
{
    FILE *fpBoot = NULL, *fpKernel = NULL, *fpFS = NULL, *fpDest = NULL, *fpConfig = NULL;
    unsigned long lBootLen, lKernelLen, lFSLen;
    int ret = 1;

    if (argc < 5)
    {
        usage(argv[0]);
        return 1;
    }
    /* argv[1]: boot, argv[2]: kernel, argv[3]: rootfs, argv[4]: output */
    if ((fpBoot = fopen(argv[1], "rb")) == NULL)
    {
        printf("%s file not found!\n", argv[1]);
        goto err;
    }
    if ((fpKernel = fopen(argv[2], "rb")) == NULL)
    {
        printf("%s file not found!\n", argv[2]);
        goto err;
    }
    if ((fpFS = fopen(argv[3], "rb")) == NULL)
    {
        printf("%s file not found!\n", argv[3]);
        goto err;
    }
    if ((fpDest = fopen(argv[4], "wb")) == NULL)
    {
        printf("Can't create %s file!\n", argv[4]);
        goto err;
    }
    /* The configuration area is copied from read.bin at the same offset */
    if ((fpConfig = fopen("read.bin", "rb")) == NULL)
    {
        printf("Can't open read.bin file!\n");
        goto err;
    }

    lBootLen = fileSize(fpBoot);
    lKernelLen = fileSize(fpKernel);
    lFSLen = fileSize(fpFS);
    printf("The Boot File size = 0x%lX\n", lBootLen);
    printf("The kernel File size = 0x%lX\n", lKernelLen);
    printf("The FS File size = 0x%lX\n", lFSLen);

    /* Each image must end before the next partition starts */
    if (lBootLen > CONFIG_OFFSET || lKernelLen > FS_OFFSET - KERNEL_OFFSET)
    {
        printf("Boot or kernel file is too large for its partition, exit!\n");
        goto err;
    }

    printf("Write Boot file to Dest file\n");
    if (copyData(fpBoot, fpDest, lBootLen, "BOOT") != 0 ||
        fillFF(fpDest, CONFIG_OFFSET - lBootLen) != 0)              /* pad up to 0x80000 */
        goto err;

    printf("Write config to Dest file\n");
    fseek(fpConfig, CONFIG_OFFSET, SEEK_SET);
    if (copyData(fpConfig, fpDest, KERNEL_OFFSET - CONFIG_OFFSET, "Config") != 0)
        goto err;

    printf("Write kernel file to Dest file\n");
    if (copyData(fpKernel, fpDest, lKernelLen, "kernel") != 0 ||
        fillFF(fpDest, FS_OFFSET - KERNEL_OFFSET - lKernelLen) != 0) /* pad up to 0x3C0000 */
        goto err;

    printf("Write FS file to Dest file\n");
    if (copyData(fpFS, fpDest, lFSLen, "FS") != 0)
        goto err;

    printf("All data written to Dest file!\nThe Dest file size is:0x%lX\n", FS_OFFSET + lFSLen);
    ret = 0;

err:
    if (fpBoot != NULL)
        fclose(fpBoot);
    if (fpKernel != NULL)
        fclose(fpKernel);
    if (fpFS != NULL)
        fclose(fpFS);
    if (fpDest != NULL)
        fclose(fpDest);
    if (fpConfig != NULL)
        fclose(fpConfig);
    return ret;
}
```


ladoo
