Action Classification Based on MMPose

Note: this project combines detection, keypoint estimation, tracking, and classification.
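
The four stages can be chained per frame roughly as follows. This is a minimal sketch rather than the project's actual code: `process_frame` and the four callables passed to it are hypothetical names standing in for the detector, tracker, pose estimator, and classifier described in the sections below, and running the tracker before the pose estimator is an assumption.

```python
from typing import Callable, Dict, List, Sequence

Box = Sequence[float]  # [x1, y1, x2, y2, ...]


def process_frame(
    frame,
    detect: Callable[[object], List[Box]],
    track: Callable[[List[Box]], List[Box]],
    estimate_pose: Callable[[object, List[Box]], List[list]],
    classify: Callable[[list], str],
) -> Dict[int, str]:
    """Run detection -> tracking -> keypoints -> classification on one frame.

    All four callables are hypothetical placeholders. `track` is assumed to
    return boxes whose last element is the track ID.
    """
    boxes = detect(frame)                      # person boxes for this frame
    tracked = track(boxes)                     # boxes with a track ID appended
    keypoints = estimate_pose(frame, [t[:4] for t in tracked])
    # Map each track ID to the action predicted from its keypoints.
    return {int(t[-1]): classify(kp) for t, kp in zip(tracked, keypoints)}
```

Passing the stages in as callables keeps the sketch independent of any particular detector or tracker implementation.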

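Detection model

This project uses a YOLOv7 model to detect people.

Clone the repository:

    git clone https://github.com/WongKinYiu/yolov7.git

Download the model weights:

https://github.com/WongKinYiu/yolov7/releases/download/v0.1/yolov7.pt

On Windows, type cmd in the address bar of the project folder to open a terminal there, then create an environment, activate it, and install the dependencies:

    conda create -n <env_name> python=X.X
    conda activate <env_name>
    pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

The -i option points pip at the Tsinghua mirror in China, which makes the download faster there.

Test the environment. If detection results are produced, the installation is complete. The command below expects the downloaded yolov7.pt to be in a weights folder:

    python detect.py --weights weights/yolov7.pt --source inference/images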

Wrapper

Create a new project named PoseAndSort.

Copy the utils and models folders from YOLOv7 into the new project, then create pose.py and wrap the detection model in a class:

    import random

    from models.experimental import attempt_load
    from utils.general import check_img_size, set_logging
    from utils.torch_utils import select_device

    # simulation_opt is the author's own options helper; its definition is not shown in this article.


    class MyDetect:
        def __init__(self, weights, device_name, img_size=640):
            self.opt = simulation_opt(weights=weights, img_size=img_size)
            weights, imgsz = self.opt.weights, self.opt.img_size

            # Initialize
            set_logging()
            # select_device expects 'cpu' or a GPU index such as '0', so map "cuda" to the first GPU
            if device_name == "cuda":
                self.device = select_device("0")
            else:
                self.device = select_device(device_name)
            self.half = self.device.type != 'cpu'  # half precision only supported on CUDA

            self.model = attempt_load(weights, map_location=self.device)  # load FP32 model
            self.stride = int(self.model.stride.max())  # model stride
            self.imgsz = check_img_size(imgsz, s=self.stride)  # check img_size
            if self.half:
                self.model.half()  # to FP16
            self.classify = False  # no second-stage classifier

            # Read class names and assign a random color to each
            self.names = self.model.module.names if hasattr(self.model, 'module') else self.model.names
            self.colors = [[random.randint(0, 255) for _ in range(3)] for _ in self.names]

        def run(self, source):  # call this method to run detection
            # Detection code omitted ...
            # Returns the detections after NMS and scale_coords
            ...
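
Since only people are needed downstream, the detector output can be filtered by class and confidence. The sketch below assumes each detection row has the layout `[x1, y1, x2, y2, conf, cls]` (the layout YOLOv7 produces after NMS) and that class 0 is `person`, as in the COCO label set. The name `keep_persons` and the `0.5` threshold are illustrative choices, not taken from the original project.

```python
def keep_persons(detections, conf_thres=0.5, person_cls=0):
    """Return [x1, y1, x2, y2, conf] rows for confident person detections."""
    kept = []
    for x1, y1, x2, y2, conf, cls in detections:
        # Keep only rows of the person class that pass the confidence threshold.
        if int(cls) == person_cls and conf >= conf_thres:
            kept.append([x1, y1, x2, y2, conf])
    return kept
```

The returned rows can then be handed to the pose estimator and the tracker.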

MMPose keypoints

Note: foot keypoints were specifically required, so the YOLOv7 keypoint model was not used.

The model is RTMPose-l for human 2D keypoints (17 Keypoints):

| Config | Input Size | AP (COCO) | Params (M) | FLOPS (G) | ORT-Latency (ms) (i7-11700) | TRT-FP16-Latency (ms) (GTX 1660Ti) | ncnn-FP16-Latency (ms) (Snapdragon 865) |
|---|---|---|---|---|---|---|---|
| RTMPose-l | 256x192 | 76.5 | 27.66 | 4.16 | 18.85 | - | - |

Installation

Create a conda virtual environment and activate it, then install the OpenMMLab packages:

    conda create --name openmmlab python=3.8 -y
    conda activate openmmlab
    pip install -U openmim
    mim install mmengine
    mim install "mmcv>=2.0.1"

Model inference

For inference with the MMDeploy SDK, convert the model with --dump-info so that the SDK metadata is exported as well:

    # RTMPose
    python tools/deploy.py \
        configs/mmpose/pose-detection_simcc_onnxruntime_dynamic.py \
        {RTMPOSE_PROJECT}/rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py \
        ../rtmpose_m/rtmpose_m.pth \
        demo/resources/human-pose.jpg \
        --work-dir mmdeploy_models/mmpose/sdk \
        --device cpu \
        --show \
        --dump-info  # export SDK info

Exporting to ONNX

    # Go to the mmdeploy directory
    cd ${PATH_TO_MMDEPLOY}

    # Convert RTMPose
    python tools/deploy.py \
        configs/mmpose/pose-detection_simcc_onnxruntime_dynamic.py \
        {RTMPOSE_PROJECT}/rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py \
        ../rtmpose_m/rtmpose_m.pth \
        demo/resources/human-pose.jpg \
        --work-dir mmdeploy_models/mmpose/ort \
        --device cpu \
        --show

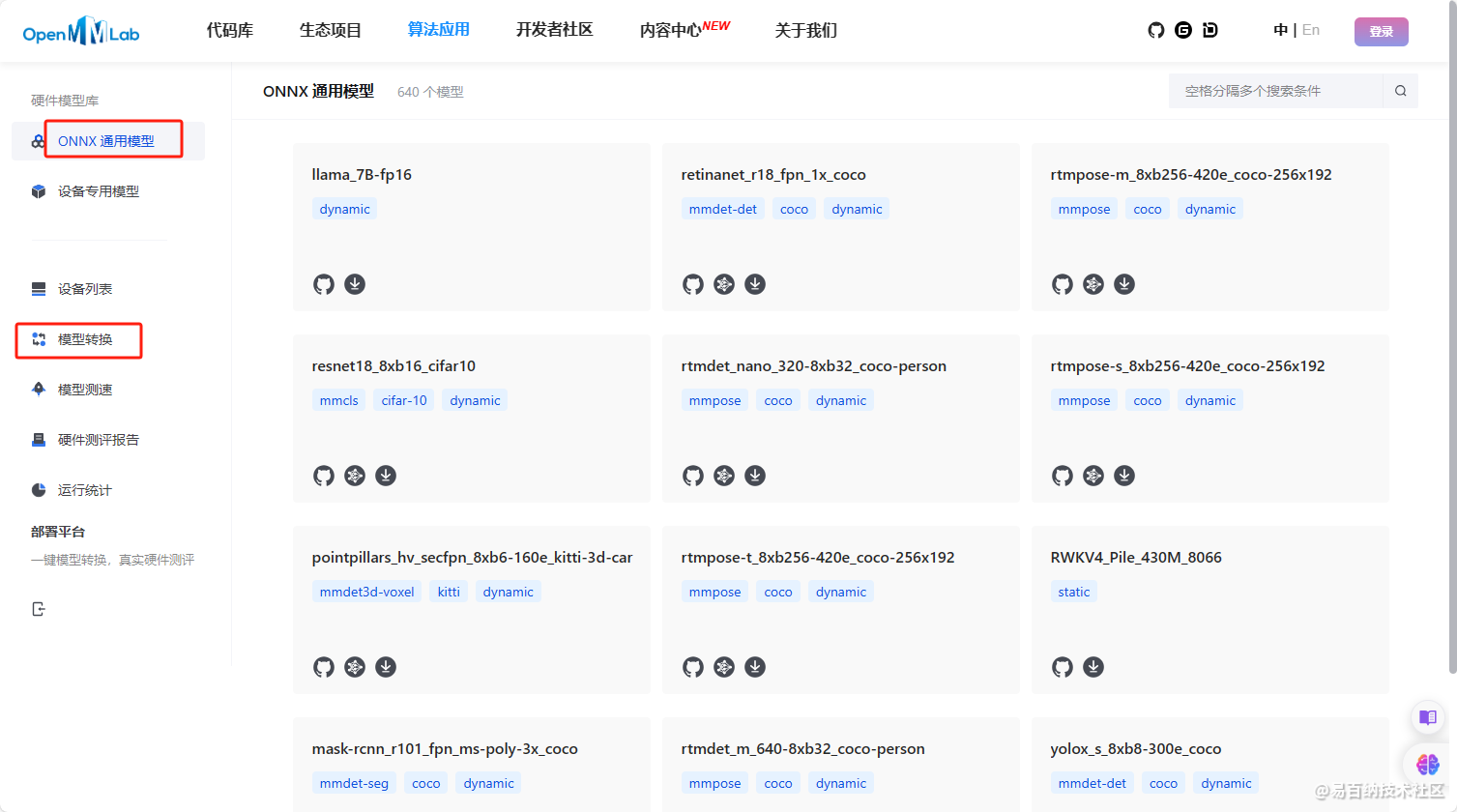

OpenMMLab also provides an online model conversion service and ready-made ONNX models.

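Wrapper

    from mmdeploy_runtime import PoseDetector


    class MyRtmpose:
        def __init__(self, pose_model_path, device_name):
            # pose_model_path is the directory of the converted SDK model
            self.model = PoseDetector(
                model_path=pose_model_path, device_name=device_name)

        def run(self, images, bboxes):
            # Returns the keypoints predicted for each bounding box
            result = self.model(images, bboxes)
            return result

Note: this requires the mmdeploy_runtime package to be installed.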

Tracking models

This project uses the classic SORT tracker as well as DeepSORT.

SORT

    import numpy as np

    # KalmanBoxTracker, associate_detections_to_trackers and get_color are defined elsewhere in the SORT module.


    class Sort(object):
        def __init__(self, max_age=1, min_hits=3, iou_threshold=0.3):
            """
            Parameters for SORT
            """
            self.max_age = max_age
            self.min_hits = min_hits
            self.iou_threshold = iou_threshold
            self.trackers = []
            self.frame_count = 0
            self.color_list = []

        def getTrackers(self):
            return self.trackers

        def update(self, dets=np.empty((0, 6)), unique_color=False):
            """
            Parameters:
            'dets' - a numpy array of detections in the format [[x1, y1, x2, y2, score], [x1,y1,x2,y2,score],...]

            Call this method even for frames without detections (pass an empty array such as the default np.empty((0, 6))).

            Returns a similar array, where the last column is the object ID (replacing the confidence score).

            NOTE: The number of objects returned may differ from the number of objects provided.
            """
            self.frame_count += 1

            # Get predicted locations from existing trackers
            trks = np.zeros((len(self.trackers), 6))
            to_del = []
            ret = []
            for t, trk in enumerate(trks):
                pos = self.trackers[t].predict()[0]
                trk[:] = [pos[0], pos[1], pos[2], pos[3], 0, 0]
                if np.any(np.isnan(pos)):
                    to_del.append(t)
            trks = np.ma.compress_rows(np.ma.masked_invalid(trks))
            for t in reversed(to_del):
                self.trackers.pop(t)
                if unique_color:
                    self.color_list.pop(t)

            matched, unmatched_dets, unmatched_trks = associate_detections_to_trackers(dets, trks, self.iou_threshold)

            # Update matched trackers with assigned detections
            for m in matched:
                self.trackers[m[1]].update(dets[m[0], :])

            # Create and initialize new trackers for unmatched detections
            for i in unmatched_dets:
                trk = KalmanBoxTracker(np.hstack((dets[i, :], np.array([0]))))
                self.trackers.append(trk)
                if unique_color:
                    self.color_list.append(get_color())

            i = len(self.trackers)
            for trk in reversed(self.trackers):
                d = trk.get_state()[0]
                if (trk.time_since_update < 1) and (trk.hit_streak >= self.min_hits or self.frame_count <= self.min_hits):
                    # +1'd because MOT benchmark requires positive value
                    ret.append(np.concatenate((d, [trk.id + 1])).reshape(1, -1))
                i -= 1
                # Remove dead tracklet
                if trk.time_since_update > self.max_age:
                    self.trackers.pop(i)
                    if unique_color:
                        self.color_list.pop(i)
            if len(ret) > 0:
                return np.concatenate(ret)
            return np.empty((0, 6))
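
SORT matches detections to predicted track boxes by their intersection over union (IoU); pairs whose IoU falls below `iou_threshold` are left unmatched. A minimal sketch of the IoU computation for two `[x1, y1, x2, y2]` boxes follows. The `iou` helper is illustrative only and is not the `associate_detections_to_trackers` function used above.

```python
def iou(box_a, box_b):
    """Intersection over union of two [x1, y1, x2, y2] boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

With the default `iou_threshold=0.3`, two boxes that share a third of their combined area would still be eligible for matching.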

DeepSORT

    import numpy as np
    import torch

    # Extractor, NearestNeighborDistanceMetric, non_max_suppression, Detection and Tracker
    # come from the DeepSORT package that this class belongs to.


    class DeepSort(object):
        def __init__(self, model_path, max_dist=0.2, min_confidence=0.3, nms_max_overlap=1.0, max_iou_distance=0.7, max_age=70, n_init=3, nn_budget=100, use_cuda=True):
            self.min_confidence = min_confidence
            self.nms_max_overlap = nms_max_overlap

            self.extractor = Extractor(model_path, use_cuda=use_cuda)

            max_cosine_distance = max_dist
            # Use the nn_budget argument instead of overwriting it with a hard-coded 100
            metric = NearestNeighborDistanceMetric("cosine", max_cosine_distance, nn_budget)
            self.tracker = Tracker(metric, max_iou_distance=max_iou_distance, max_age=max_age, n_init=n_init)

        def update(self, bbox_xywh, confidences, ori_img):
            self.height, self.width = ori_img.shape[:2]
            # Generate detections
            features = self._get_features(bbox_xywh, ori_img)
            bbox_tlwh = self._xywh_to_tlwh(bbox_xywh)
            detections = [Detection(bbox_tlwh[i], conf, features[i]) for i, conf in enumerate(confidences) if conf > self.min_confidence]

            # Run non-maximum suppression
            boxes = np.array([d.tlwh for d in detections])
            scores = np.array([d.confidence for d in detections])
            indices = non_max_suppression(boxes, self.nms_max_overlap, scores)
            detections = [detections[i] for i in indices]

            # Update tracker
            self.tracker.predict()
            self.tracker.update(detections)

            # Output bbox identities
            outputs = []
            for track in self.tracker.tracks:
                if not track.is_confirmed() or track.time_since_update > 1:
                    continue
                box = track.to_tlwh()
                x1, y1, x2, y2 = self._tlwh_to_xyxy(box)
                track_id = track.track_id
                # np.int was removed in NumPy 1.24; the built-in int is equivalent here
                outputs.append(np.array([x1, y1, x2, y2, track_id], dtype=int))
            if len(outputs) > 0:
                outputs = np.stack(outputs, axis=0)
            return outputs

        @staticmethod
        def _xywh_to_tlwh(bbox_xywh):
            """
            Convert bbox from xc_yc_w_h to xtl_ytl_w_h
            Thanks JieChen91@github.com for reporting this bug!
            """
            if isinstance(bbox_xywh, np.ndarray):
                bbox_tlwh = bbox_xywh.copy()
            elif isinstance(bbox_xywh, torch.Tensor):
                bbox_tlwh = bbox_xywh.clone()
            bbox_tlwh[:, 0] = bbox_xywh[:, 0] - bbox_xywh[:, 2] / 2.
            bbox_tlwh[:, 1] = bbox_xywh[:, 1] - bbox_xywh[:, 3] / 2.
            return bbox_tlwh

        def _xywh_to_xyxy(self, bbox_xywh):
            # Center format to corner format, clipped to the image
            x, y, w, h = bbox_xywh
            x1 = max(int(x - w / 2), 0)
            x2 = min(int(x + w / 2), self.width - 1)
            y1 = max(int(y - h / 2), 0)
            y2 = min(int(y + h / 2), self.height - 1)
            return x1, y1, x2, y2

        def _tlwh_to_xyxy(self, bbox_tlwh):
            """
            Convert bbox from xtl_ytl_w_h to x1_y1_x2_y2, clipped to the image
            Thanks JieChen91@github.com for reporting this bug!
            """
            x, y, w, h = bbox_tlwh
            x1 = max(int(x), 0)
            x2 = min(int(x + w), self.width - 1)
            y1 = max(int(y), 0)
            y2 = min(int(y + h), self.height - 1)
            return x1, y1, x2, y2

        def _xyxy_to_tlwh(self, bbox_xyxy):
            x1, y1, x2, y2 = bbox_xyxy
            t = x1
            l = y1
            w = int(x2 - x1)
            h = int(y2 - y1)
            return t, l, w, h

        def _get_features(self, bbox_xywh, ori_img):
            # Crop each detection from the frame and extract its appearance feature
            im_crops = []
            for box in bbox_xywh:
                x1, y1, x2, y2 = self._xywh_to_xyxy(box)
                im = ori_img[y1:y2, x1:x2]
                im_crops.append(im)
            if im_crops:
                features = self.extractor(im_crops)
            else:
                features = np.array([])
            return features
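
Besides box overlap, DeepSORT compares appearance features. With the `"cosine"` metric, a detection is roughly only associated with a track when the smallest cosine distance between its feature and the features stored for that track does not exceed `max_dist`. The helpers below are a plain-Python sketch of that idea under hypothetical names (`cosine_distance`, `nearest_cosine_distance`); they are not the `NearestNeighborDistanceMetric` implementation itself.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity of two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest_cosine_distance(gallery, feature):
    """Smallest cosine distance between `feature` and any stored track feature."""
    return min(cosine_distance(stored, feature) for stored in gallery)
```

A distance near 0 means the two crops look alike, while a distance near 1 means their features are unrelated.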

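Classification

Because of data limitations, classical machine-learning models are used to classify the actions.

The 26 extracted keypoints are normalized before classification.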
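
The article does not say how the keypoints are normalized. One common choice, shown here purely as an assumption, is to express each keypoint relative to the person's bounding box, so that the features do not depend on where the person stands in the image or how large they appear. `normalize_keypoints` is a hypothetical helper; if each of the 26 keypoints carries three values (x, y, score), it yields a 78-element vector.

```python
def normalize_keypoints(keypoints, bbox):
    """Scale (x, y, score) keypoints into the unit square of the bounding box.

    keypoints: iterable of (x, y, score) tuples in image pixels.
    bbox: (x1, y1, x2, y2) of the person in image pixels.
    Returns a flat list [x0, y0, s0, x1, y1, s1, ...].
    """
    x1, y1, x2, y2 = bbox
    width = max(x2 - x1, 1e-6)   # guard against degenerate boxes
    height = max(y2 - y1, 1e-6)
    features = []
    for x, y, score in keypoints:
        features.extend([(x - x1) / width, (y - y1) / height, score])
    return features
```

The flat list can be stacked row by row to form the feature matrix passed to the classifiers.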

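Random forest

    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score
    from sklearn.model_selection import train_test_split

    # all_embedding holds the keypoint features, all_target the action labels
    X_train, X_test, y_train, y_test = train_test_split(all_embedding, all_target, test_size=0.25, shuffle=True,
                                                        stratify=all_target)
    rfc = RandomForestClassifier(random_state=0, n_estimators=59, max_depth=13, min_samples_leaf=1, min_samples_split=24)
    rfc = rfc.fit(X_train, y_train)
    score_r = rfc.score(X_test, y_test)
    pre = rfc.predict(X_test)  # predicted classes
    print("Test accuracy:", accuracy_score(y_test, pre))
    print("Test recall:", recall_score(y_test, pre, average="weighted"))
    print("Test precision:", precision_score(y_test, pre, average="weighted"))
    print("Test F1:", f1_score(y_test, pre, average="weighted"))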

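Decision tree

    from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score
    from sklearn.tree import DecisionTreeClassifier

    # Decision tree classifier, trained on the same split as the random forest
    clf = DecisionTreeClassifier(random_state=0)
    clf = clf.fit(X_train, y_train)
    score_c = clf.score(X_test, y_test)
    pre = clf.predict(X_test)  # predicted classes
    print("Test accuracy:", accuracy_score(y_test, pre))
    print("Test recall:", recall_score(y_test, pre, average="weighted"))
    print("Test precision:", precision_score(y_test, pre, average="weighted"))
    print("Test F1:", f1_score(y_test, pre, average="weighted"))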

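SVC

    from sklearn.model_selection import train_test_split
    from sklearn.svm import SVC

    # Split into training and test sets
    X_train, X_test, y_train, y_test = train_test_split(data, labels, test_size=0.2, random_state=0)

    # Train the model
    model = SVC()
    model.fit(X_train, y_train)

    # Evaluate the model
    accuracy = model.score(X_test, y_test)
    print("Accuracy:", accuracy)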

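KNN classifier

    from sklearn.metrics import accuracy_score
    from sklearn.model_selection import train_test_split
    from sklearn.neighbors import KNeighborsClassifier

    X_train, X_test, y_train, y_test = train_test_split(all_embedding, all_target, test_size=0.25, shuffle=True,
                                                        stratify=all_target)

    # Create the KNN classifier
    knn = KNeighborsClassifier(n_neighbors=18)

    # Train the classifier
    knn.fit(X_train, y_train)

    # Predict on the test set
    y_pred = knn.predict(X_test)

    # Compute the accuracy
    accuracy = accuracy_score(y_test, y_pred)
    print("Accuracy:", accuracy)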

Neural network

    import torch.nn as nn


    # Define the neural network model
    class ComplexNet(nn.Module):
        def __init__(self):
            super(ComplexNet, self).__init__()
            self.fc1 = nn.Linear(78, 936)  # 78 input features
            self.fc2 = nn.Linear(936, 780)
            self.fc3 = nn.Linear(780, 624)
            self.fc4 = nn.Linear(624, 468)
            self.fc5 = nn.Linear(468, 5)  # 5 output classes
            # Sigmoid activation (the original attribute was misleadingly named relu)
            self.act = nn.Sigmoid()
            # self.act = nn.ReLU()
            self.dropout = nn.Dropout(0.2)

        def forward(self, x):
            x = self.fc1(x)
            x = self.act(x)
            x = self.dropout(x)
            x = self.fc2(x)
            x = self.act(x)
            x = self.dropout(x)
            x = self.fc3(x)
            x = self.act(x)
            x = self.dropout(x)
            x = self.fc4(x)
            x = self.act(x)
            x = self.dropout(x)
            x = self.fc5(x)  # raw class scores (logits)
            return x
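
The network returns five raw scores (logits) per sample. To turn them into a predicted action, take the index of the largest score; a softmax gives the matching probabilities. The sketch below does this in plain Python. The label names in `ACTIONS` are placeholders, since the article does not list the five classes, and `predict_action` is a hypothetical helper.

```python
import math

# Placeholder names: the article does not say what the five classes are.
ACTIONS = ["class_0", "class_1", "class_2", "class_3", "class_4"]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_action(logits):
    """Return (label, probability) for the highest-scoring class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return ACTIONS[best], probs[best]
```

Subtracting the largest logit before exponentiating avoids overflow without changing the result.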

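Disclaimer: this article was written by an author registered on the 易百纳 platform. The views expressed are the author's own and do not represent the position of 易百纳. If the content infringes any rights or raises other issues, please contact the site to have it removed.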
