Pulling an RTSP stream with live555 and decoding it with FFmpeg

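"Recently I had a requirement to deliver video over RTSP, with support for dragging the progress bar, fast-forward and similar features. My first plan was to implement everything directly with FFmpeg, but its RTSP support did not seem very good and left little room for customization (possibly I just did not find the right material), whereas decoding with FFmpeg is very mature. Since live555 implements RTSP, I decided to use live555 to transport the video stream and FFmpeg to decode it. After going through a lot of material and blog posts, I found that the approach itself is sound. The sticking point is the FFmpeg decoding step: many posts only outline the idea and give no code that runs as-is. After a lot of tinkering I finally managed to decode the RTP video stream to YUV with FFmpeg, so I am recording it here to help anyone who goes on to build a streaming media player."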

IDE: VS2017 (VS2019 also works)

FFmpeg: the latest x64 build (the shared build is enough)

live555: the latest version from the official site (as of 2021-06-01)

Environment setup

1. live555 build environment

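The CSDN blog post "Windows10系统中用VS2019编译live555" (Compiling live555 with VS2019 on Windows 10) by Rustone already covers this in detail, so I will not repeat it here. One difference is worth noting: for the BasicUsageEnvironment, UsageEnvironment, groupsock and liveMedia projects, I did not change [Configuration Properties] -- [General] -- [Output Directory] to

$(SolutionDir)$(Configuration)\lib\

but kept the default value:

$(SolutionDir)$(Configuration)\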

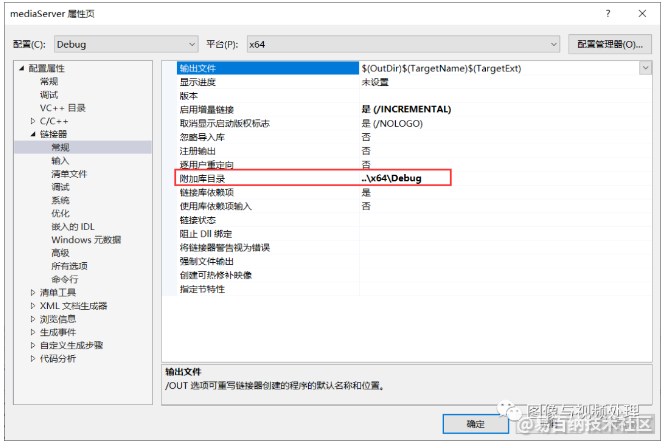

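With that choice, one extra step is needed: set Additional Library Directories under [Configuration Properties] -- [Linker] -- [General] to ..\x64\Debug, or to ..\Debug for a 32-bit configuration.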

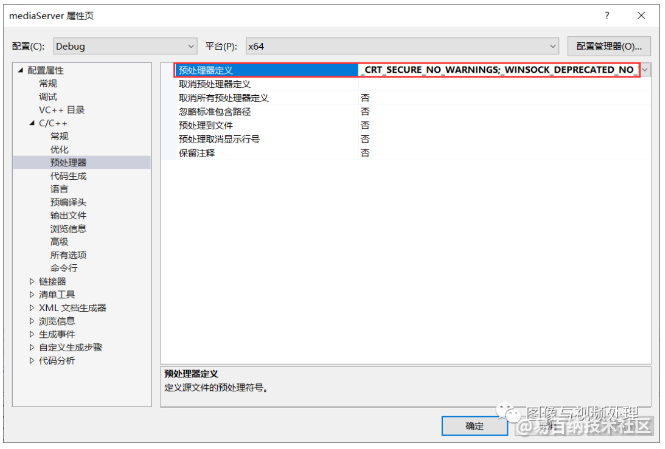

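If the build fails, add the following macros to Preprocessor Definitions under [Configuration Properties] -- [C/C++] -- [Preprocessor], and turn off SDL checks under [C/C++] -- [General]:

_CRT_SECURE_NO_WARNINGS

_WINSOCK_DEPRECATED_NO_WARNINGS

NO_OPENSSL

NO_GETIFADDRS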

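2. FFmpeg environment

See the blog post "Visual Studio配置FFmpeg和SDL开发环境" (Configuring an FFmpeg and SDL development environment in Visual Studio).

Transport with live555 & decoding with FFmpeg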

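This is mainly a modification of live555's testRTSPClient. The key change is to the DummySink class. DummySink derives from MediaSink, and the work happens in afterGettingFrame, which receives the data of each frame. FFmpeg, however, needs the SPS and PPS in order to decode, so they have to be obtained first (the code below takes them from the SDP sprop-parameter-sets attribute). In addition, a start_code has to be added by hand in front of every NALU of the raw stream (for H.264 the start_code is {0x0,0x0,0x0,0x1}). Only then can FFmpeg decode the stream correctly.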
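As a rough illustration of the framing described above, the sketch below prepends the 4-byte start code to a raw NAL unit and lays out an SPS + PPS header the same way. It uses only the standard library; the helper names `withStartCode` and `buildParameterSetHeader` are made up for this example and are not part of live555 or FFmpeg.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// H.264 Annex B start code that separates NAL units in a byte stream.
static const std::uint8_t kStartCode[4] = {0x00, 0x00, 0x00, 0x01};

// Returns start code + NAL payload. `nal` points at `len` bytes as delivered
// by the RTP depacketizer, i.e. without any start code.
std::vector<std::uint8_t> withStartCode(const std::uint8_t* nal, std::size_t len) {
    assert(nal != nullptr || len == 0);
    std::vector<std::uint8_t> out(kStartCode, kStartCode + 4);
    if (len > 0) out.insert(out.end(), nal, nal + len);
    return out;
}

// Builds 00 00 00 01 <SPS> 00 00 00 01 <PPS>, the layout handed to the
// decoder as its initial parameter sets.
std::vector<std::uint8_t> buildParameterSetHeader(const std::vector<std::uint8_t>& sps,
                                                  const std::vector<std::uint8_t>& pps) {
    std::vector<std::uint8_t> out = withStartCode(sps.data(), sps.size());
    const std::vector<std::uint8_t> ppsPart = withStartCode(pps.data(), pps.size());
    out.insert(out.end(), ppsPart.begin(), ppsPart.end());
    return out;
}
```

The resulting size is always 8 + SPS length + PPS length, which is where the constant 8 in the decoder setup comes from.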

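1. Creating the project

The project is called MyRtspClient and is an empty console project. Create it as described in the live555 build environment step above, and set up FFmpeg as described in the FFmpeg environment step; the remaining settings are the same as for mediaServer. Then import testRTSPClient.cpp from the testProgs folder of the live555 source tree into the new project. Add a class named DummySink with its own header, move the whole DummySink class definition from testRTSPClient.cpp into DummySink.h, and move the DummySink implementation into DummySink.cpp. The main additions are saveFrame, which writes the YUV file, openDecoder, which selects the decoder, and decodeAndSaveFrame, which decodes each received frame. Note that pCodecCtx and pCodec must be members of DummySink, not local variables in decodeAndSaveFrame. I was bitten by this many times: the decoder context has to persist across frames, because the SPS and PPS are supplied only once at the start and are not set up again for every frame.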
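When debugging which NAL units actually reach afterGettingFrame (for example, to see whether the server also sends SPS and PPS in-band), it can help to look at the NAL header. In H.264 the low five bits of the first byte hold nal_unit_type: 5 is an IDR slice, 7 an SPS and 8 a PPS. The following is a minimal standard-library sketch; the names `nalUnitType` and `isParameterSet` are illustrative only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// A few nal_unit_type values defined by the H.264 specification.
enum NalType {
    kNalNonIdrSlice = 1,
    kNalIdrSlice = 5,
    kNalSei = 6,
    kNalSps = 7,
    kNalPps = 8
};

// Returns nal_unit_type (low 5 bits of the first byte), or -1 for empty input.
// `nal` must point at the NAL header, i.e. after any start code.
int nalUnitType(const std::uint8_t* nal, std::size_t len) {
    assert(len == 0 || nal != nullptr);
    if (len == 0) return -1;
    return nal[0] & 0x1F;
}

// True when the NAL unit is a sequence or picture parameter set.
bool isParameterSet(const std::uint8_t* nal, std::size_t len) {
    const int type = nalUnitType(nal, len);
    return type == kNalSps || type == kNalPps;
}
```

Called on the receive buffer at the top of the decode function, this would show at a glance whether a given frame is a parameter set, a key frame or an ordinary slice.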

DummySink.h

#pragma once

#include <cstdio>
#include "liveMedia.hh"

extern "C" {
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libavutil/pixfmt.h"
#include "libswscale/swscale.h"
}

#define SAVE_YUV 0

class DummySink : public MediaSink {
public:
    static DummySink* createNew(UsageEnvironment& env,
        MediaSubsession& subsession,   // identifies the kind of data that's being received
        char const* streamId = NULL);  // identifies the stream itself (optional)

private:
    DummySink(UsageEnvironment& env, MediaSubsession& subsession, char const* streamId);
    // called only by "createNew()"
    virtual ~DummySink();

    static void afterGettingFrame(void* clientData, unsigned frameSize,
        unsigned numTruncatedBytes,
        struct timeval presentationTime,
        unsigned durationInMicroseconds);
    void afterGettingFrame(unsigned frameSize, unsigned numTruncatedBytes,
        struct timeval presentationTime, unsigned durationInMicroseconds);

private:
    // redefined virtual functions:
    virtual Boolean continuePlaying();

    void saveFrame(AVFrame* pFrameYUV, int width, int height); // writes one decoded YUV frame to the output file
    int openDecoder();                  // finds the decoder and allocates/opens its context
    void decodeAndSaveFrame(int nLen);  // decodes the nLen bytes currently in fReceiveBuffer

private:
    u_int8_t* fReceiveBuffer;
    MediaSubsession& fSubsession;
    char* fStreamId;

private:
    // Decoder state is kept in members so that it persists across frames.
    AVCodecContext* pCodecCtx;
    const AVCodec* pCodec;
    FILE* fp_yuv;
};

// Helper struct that holds the bytes to be wrapped in an AVPacket
typedef struct {
    unsigned char* frameBuf;
    int frameBufSize;
} FrameUnit;


DummySink.cpp

#include <cstdio>
#include <cstring>
#include <iostream>
#include "DummySink.h"

extern "C" {
#include "libavutil/imgutils.h"
}

// Size of the buffer that receives each frame from live555:
#define DUMMY_SINK_RECEIVE_BUFFER_SIZE 100000

DummySink* DummySink::createNew(UsageEnvironment& env, MediaSubsession& subsession, char const* streamId) {
    return new DummySink(env, subsession, streamId);
}

DummySink::DummySink(UsageEnvironment& env, MediaSubsession& subsession, char const* streamId)
    : MediaSink(env),
      fSubsession(subsession),
      pCodecCtx(NULL), pCodec(NULL), fp_yuv(NULL) {
    fStreamId = strDup(streamId);
    fReceiveBuffer = new u_int8_t[DUMMY_SINK_RECEIVE_BUFFER_SIZE];
    // Only the video subsession is decoded and written to the YUV file.
    if (strcmp(fSubsession.mediumName(), "video") == 0) {
        openDecoder();
        fp_yuv = fopen("decode_frame.yuv", "wb+");
    }
}

DummySink::~DummySink() {
    delete[] fReceiveBuffer;
    delete[] fStreamId;
    if (fp_yuv) fclose(fp_yuv);
    avcodec_free_context(&pCodecCtx); // also frees extradata; does nothing if pCodecCtx is NULL
}

void DummySink::afterGettingFrame(void* clientData, unsigned frameSize, unsigned numTruncatedBytes,
    struct timeval presentationTime, unsigned durationInMicroseconds) {
    DummySink* sink = (DummySink*)clientData;
    sink->afterGettingFrame(frameSize, numTruncatedBytes, presentationTime, durationInMicroseconds);
}

// If you don't want to see debugging output for each received frame, then comment out the following line:
#define DEBUG_PRINT_EACH_RECEIVED_FRAME 1

void DummySink::afterGettingFrame(unsigned frameSize, unsigned numTruncatedBytes,
    struct timeval presentationTime, unsigned /*durationInMicroseconds*/) {
    // We've just received a frame of data. (Optionally) print out information about it:
#ifdef DEBUG_PRINT_EACH_RECEIVED_FRAME
    if (fStreamId != NULL) envir() << "Stream \"" << fStreamId << "\"; ";
    envir() << fSubsession.mediumName() << "/" << fSubsession.codecName() << ":\tReceived " << frameSize << " bytes";
    if (numTruncatedBytes > 0) envir() << " (with " << numTruncatedBytes << " bytes truncated)";
    char uSecsStr[6 + 1]; // used to output the 'microseconds' part of the presentation time
    sprintf(uSecsStr, "%06u", (unsigned)presentationTime.tv_usec);
    envir() << ".\tPresentation time: " << (int)presentationTime.tv_sec << "." << uSecsStr;
    if (fSubsession.rtpSource() != NULL && !fSubsession.rtpSource()->hasBeenSynchronizedUsingRTCP()) {
        envir() << "!"; // mark the debugging output to indicate that this presentation time is not RTCP-synchronized
    }
#ifdef DEBUG_PRINT_NPT
    envir() << "\tNPT: " << fSubsession.getNormalPlayTime(presentationTime);
#endif
    envir() << "\n";
#endif

    decodeAndSaveFrame(frameSize);

    // Then continue, to request the next frame of data:
    continuePlaying();
}

Boolean DummySink::continuePlaying() {
    if (fSource == NULL) return False; // sanity check (should not happen)

    // Request the next frame of data from our input source. "afterGettingFrame()" will get called later, when it arrives:
    fSource->getNextFrame(fReceiveBuffer, DUMMY_SINK_RECEIVE_BUFFER_SIZE,
        afterGettingFrame, this,
        onSourceClosure, this);
    return True;
}

void DummySink::saveFrame(AVFrame* pFrameYUV, int width, int height)
{
    // pFrameYUV is filled with alignment 1 below, so each plane is contiguous
    // and can be written with a single fwrite.
    int y_size = width * height;
    fwrite(pFrameYUV->data[0], 1, y_size, fp_yuv);     // Y
    fwrite(pFrameYUV->data[1], 1, y_size / 4, fp_yuv); // U
    fwrite(pFrameYUV->data[2], 1, y_size / 4, fp_yuv); // V
}

int DummySink::openDecoder()
{
    pCodec = avcodec_find_decoder(AV_CODEC_ID_H264);
    if (!pCodec)
    {
        std::cout << "Can't find decoder\n";
        return -1;
    }
    pCodecCtx = avcodec_alloc_context3(pCodec);
    if (!pCodecCtx)
    {
        std::cout << "Can't allocate decoder context\n";
        return -1;
    }

    // Pass the SPS and PPS from the SDP to the decoder as extradata, laid out as
    // 00 00 00 01 + SPS + 00 00 00 01 + PPS. This is done before avcodec_open2(),
    // because that is where the decoder parses extradata.
    const unsigned char start_code[4] = {0, 0, 0, 1};
    unsigned numSPropRecords = 0;
    SPropRecord* records = parseSPropParameterSets(fSubsession.fmtp_spropparametersets(), numSPropRecords);
    if (records != NULL && numSPropRecords >= 2)
    {
        // The first record is taken to be the SPS and the second the PPS.
        SPropRecord& sps = records[0];
        SPropRecord& pps = records[1];
        // 8 bytes for the two start codes
        int totalSize = 8 + sps.sPropLength + pps.sPropLength;
        // FFmpeg requires zeroed padding after extradata
        unsigned char* extraData = (unsigned char*)av_mallocz(totalSize + AV_INPUT_BUFFER_PADDING_SIZE);
        if (extraData)
        {
            memcpy(extraData, start_code, 4);
            memcpy(extraData + 4, sps.sPropBytes, sps.sPropLength);
            memcpy(extraData + 4 + sps.sPropLength, start_code, 4);
            memcpy(extraData + 4 + sps.sPropLength + 4, pps.sPropBytes, pps.sPropLength);
            pCodecCtx->extradata = extraData;
            pCodecCtx->extradata_size = totalSize;
        }
    }
    delete[] records;

    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0)
    {
        std::cout << "Can't open decoder\n";
        avcodec_free_context(&pCodecCtx);
        return -1;
    }
    return 0;
}

void DummySink::decodeAndSaveFrame(int nLen)
{
    // Only the video subsession is decoded.
    if (strcmp(fSubsession.mediumName(), "video") != 0) return;
    if (!pCodecCtx || !fp_yuv)
    {
        envir() << "Decoder or output file is not ready.\n";
        return;
    }

    // live555 delivers each NAL unit without a start code, so prepend one.
    const unsigned char start_code[4] = {0, 0, 0, 1};
    FrameUnit frameUnit;
    frameUnit.frameBufSize = nLen + 4;
    // FFmpeg requires zeroed padding after the packet data
    frameUnit.frameBuf = (unsigned char*)av_mallocz(frameUnit.frameBufSize + AV_INPUT_BUFFER_PADDING_SIZE);
    AVFrame* pFrameYUV = av_frame_alloc();
    AVFrame* pFrame = av_frame_alloc();
    AVPacket* avpkt = av_packet_alloc();

    if (frameUnit.frameBuf && pFrameYUV && pFrame && avpkt)
    {
        memcpy(frameUnit.frameBuf, start_code, 4);
        memcpy(frameUnit.frameBuf + 4, fReceiveBuffer, nLen);
        avpkt->data = frameUnit.frameBuf;
        avpkt->size = frameUnit.frameBufSize;

        // avcodec_send_packet()/avcodec_receive_frame() replace the deprecated avcodec_decode_video2().
        if (avcodec_send_packet(pCodecCtx, avpkt) < 0)
        {
            envir() << "Decode: error.\n";
        }
        // One packet may yield zero or more decoded frames.
        while (avcodec_receive_frame(pCodecCtx, pFrame) == 0)
        {
            // Convert the decoded frame to tightly packed YUV420P.
            int w = pFrame->width, h = pFrame->height;
            int bufSize = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, w, h, 1);
            uint8_t* out_buffer = bufSize > 0 ? (uint8_t*)av_malloc(bufSize) : NULL;
            struct SwsContext* img_convert_ctx = sws_getContext(w, h, (AVPixelFormat)pFrame->format,
                w, h, AV_PIX_FMT_YUV420P, SWS_BICUBIC, NULL, NULL, NULL);
            if (out_buffer && img_convert_ctx)
            {
                av_image_fill_arrays(pFrameYUV->data, pFrameYUV->linesize, out_buffer, AV_PIX_FMT_YUV420P, w, h, 1);
                sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0,
                    h, pFrameYUV->data, pFrameYUV->linesize);
                // Append the YUV data to decode_frame.yuv
                saveFrame(pFrameYUV, w, h);
            }
            sws_freeContext(img_convert_ctx);
            av_free(out_buffer);
        }
    }

    // Release per-frame resources
    av_frame_free(&pFrameYUV);
    av_frame_free(&pFrame);
    av_packet_free(&avpkt);
    av_free(frameUnit.frameBuf);
}


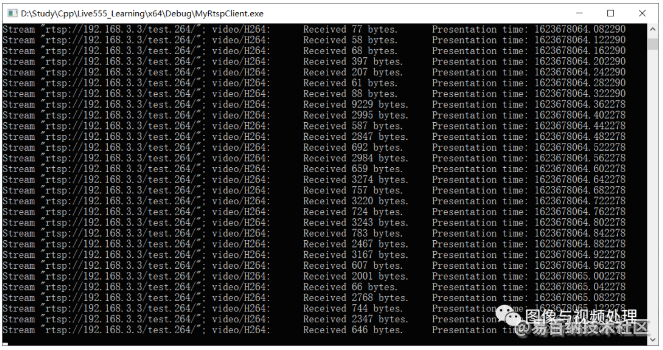
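saveFrame writes each plane with a single fwrite, which is only correct when the rows are tightly packed, that is, when the line size equals the plane width. Frames that come straight from a decoder usually have padded rows. As a hedged sketch of how such a frame could be written row by row, here is a standard-library-only version; `writePlane` and `writeYuv420p` are hypothetical helper names, and the picture is assumed to be YUV420P.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdio>

// Writes one plane row by row, skipping the padding at the end of each row.
// Returns true if every row was written completely.
bool writePlane(std::FILE* fp, const std::uint8_t* data, int linesize, int width, int height) {
    assert(fp != nullptr && data != nullptr && linesize >= width);
    for (int y = 0; y < height; ++y) {
        const std::uint8_t* row = data + static_cast<std::size_t>(y) * linesize;
        if (std::fwrite(row, 1, static_cast<std::size_t>(width), fp) != static_cast<std::size_t>(width)) {
            return false;
        }
    }
    return true;
}

// Writes the Y, U and V planes of a YUV420P picture. The chroma planes have
// half the width and half the height of the luma plane (rounded up).
bool writeYuv420p(std::FILE* fp, const std::uint8_t* const planes[3], const int linesizes[3],
                  int width, int height) {
    const int chromaWidth = (width + 1) / 2;
    const int chromaHeight = (height + 1) / 2;
    return writePlane(fp, planes[0], linesizes[0], width, height)
        && writePlane(fp, planes[1], linesizes[1], chromaWidth, chromaHeight)
        && writePlane(fp, planes[2], linesizes[2], chromaWidth, chromaHeight);
}
```

With an AVFrame whose format is already YUV420P, the call would look something like `writeYuv420p(fp_yuv, pFrame->data, pFrame->linesize, pFrame->width, pFrame->height)`, which would make the sws_scale conversion unnecessary for that case.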

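2. Results

I used mediaServer to set up the RTSP server. For a simpler test, VLC can also act as the RTSP server; a web search will turn up the details.

Test results:

Console output while decoding

The YUV file saved after decoding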
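A quick sanity check on the saved file: a YUV420P frame occupies width × height × 3 / 2 bytes, so the file size should be an exact multiple of that. The sketch below computes the frame count from a file size; the function names are illustrative, and even frame dimensions are assumed.

```cpp
#include <cassert>
#include <cstdint>

// Bytes occupied by one YUV420P frame (even width and height assumed):
// a full-size Y plane plus two quarter-size chroma planes.
std::int64_t yuv420pFrameBytes(int width, int height) {
    assert(width > 0 && height > 0);
    return static_cast<std::int64_t>(width) * height * 3 / 2;
}

// Returns the number of whole frames in a file of `fileBytes` bytes,
// or -1 if the size is not an exact multiple of the frame size.
std::int64_t countFrames(std::int64_t fileBytes, int width, int height) {
    const std::int64_t frameBytes = yuv420pFrameBytes(width, height);
    if (fileBytes < 0 || fileBytes % frameBytes != 0) return -1;
    return fileBytes / frameBytes;
}
```

For playback, the raw file can be opened with something like `ffplay -f rawvideo -pixel_format yuv420p -video_size 1280x720 decode_frame.yuv`, where 1280x720 is only a placeholder for the actual resolution of the stream.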

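Closing remarks

Audio and video development really is not easy. Reference material is scarce, and anything of even modest value tends to sit behind a paid online course, so a few hundred yuan is gone before you know it. To get this simple demo working I ended up buying a course on live555, only to find that the videos were disappointing and barely covered any code. The main thing I got out of it was how to compile live555. In other words, this article is worth 100 yuan ╮(╯▽╰)╭.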
